Introduction

AutoRob is an introduction to the computational foundations of autonomous robotics for programming modern mobile manipulation systems. AutoRob covers fundamental concepts in autonomous robotics for the kinematic modeling of arbitrary open-chain articulated robots and algorithmic reasoning for autonomous path and motion planning, with brief coverage of dynamics and motion control. These core concepts are contextualized through their instantiation in modern robot operating systems, such as ROS and LCM. AutoRob covers some of the fundamental concepts in computing, common to a second semester data structures course, in the context of robot reasoning, but without analysis of computational complexity. The AutoRob learning objectives are geared to ensure students completing the course are fluent programmers who are capable of computational thought and can develop full-stack mobile manipulation software systems.

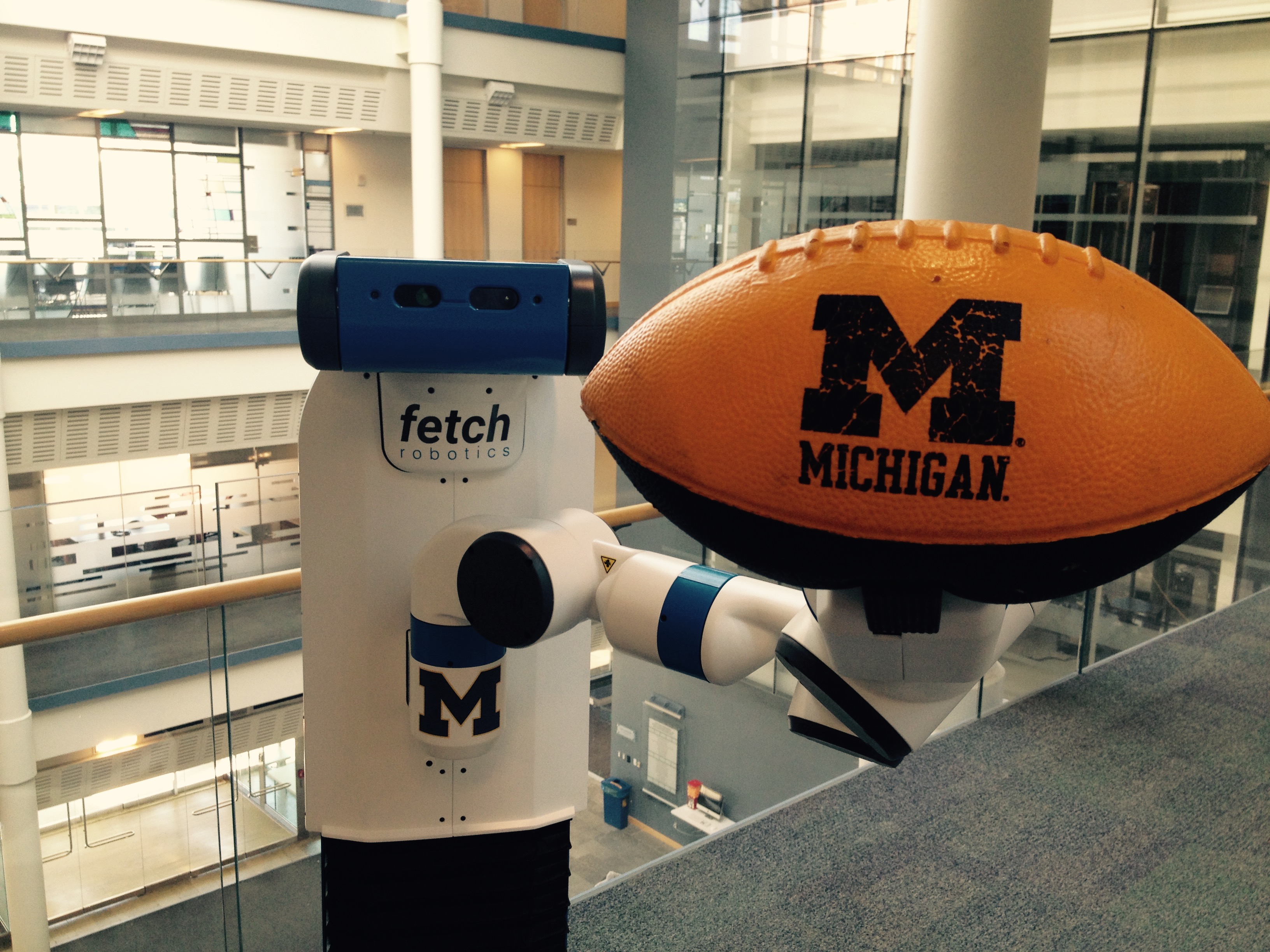

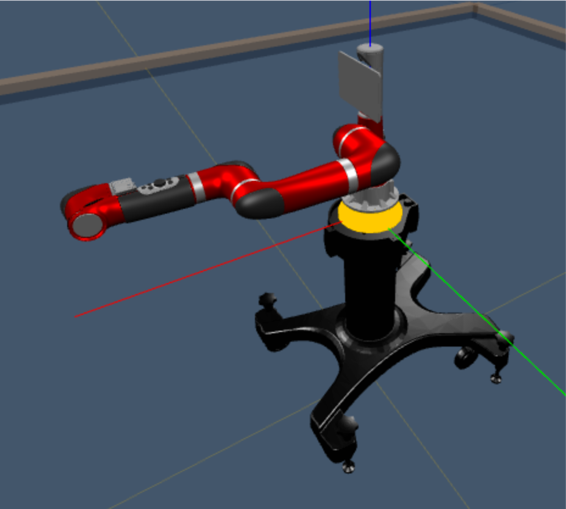

The AutoRob course can be thought of as an exploration into the foundation for reasoning and computation by autonomous robots capable of mobility and dexterity. That is, given a robot as a machine with sensing, actuation, and computation, how do we build computational models, algorithms, and software implementations that allow the robot to function autonomously, especially for pick-and-place tasks? Such computation involves functions for robots to perceive the world (as covered in Robotics 330, EECS 467, EECS 442, or EECS 542), make decisions towards achieving a given objective (this class as well as EECS 492), transform action into motor commands (as covered in Robotics 310, Robotics 311, or EECS 367), and work usably with human users (as covered in Robotics 340). Computationally, these functions form the basis of the sense-plan-act paradigm that defines the discipline of robotics as the study of embodied intelligence, as described by Brooks. Embodied intelligence allows for understanding and extending concepts essential for modern robotics, especially mobile manipulators such as the pictured Fetch robot.

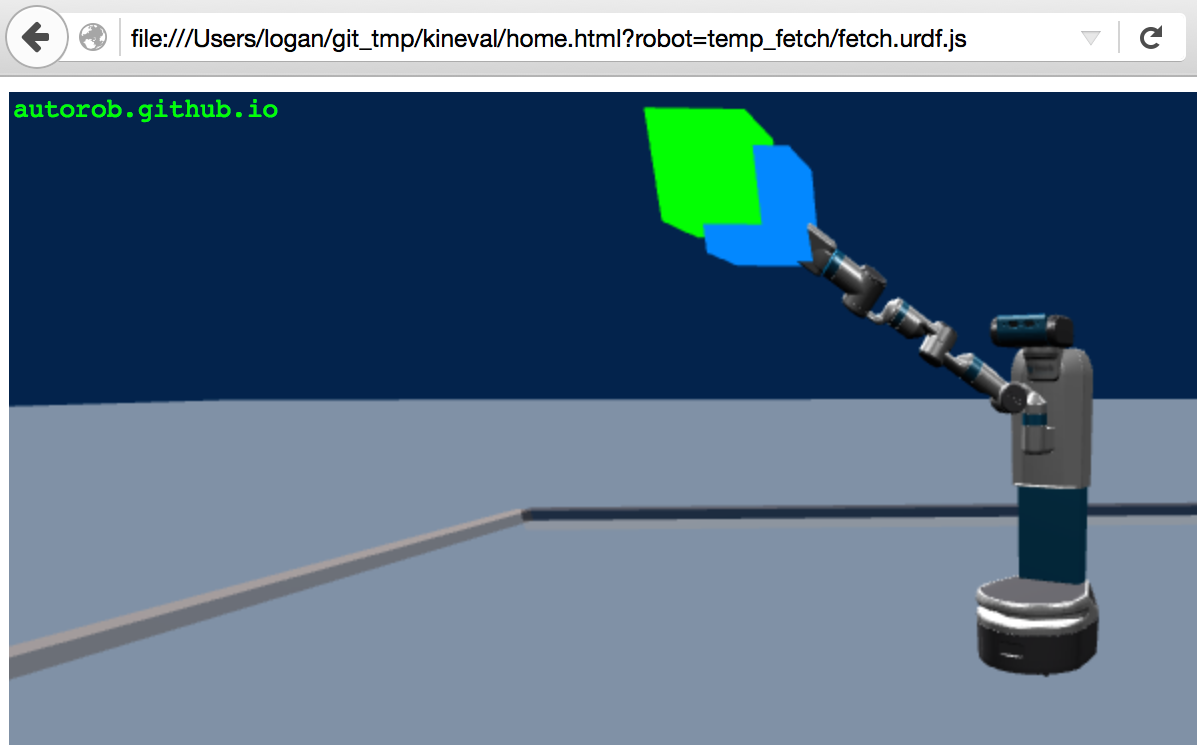

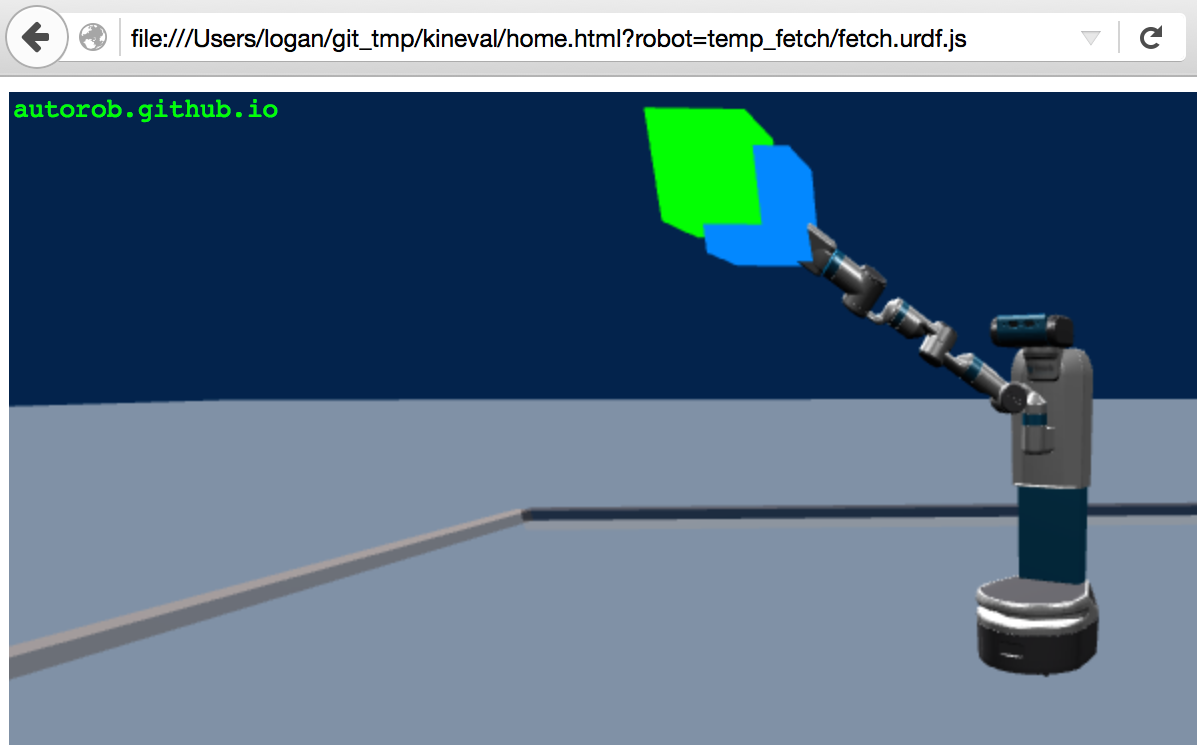

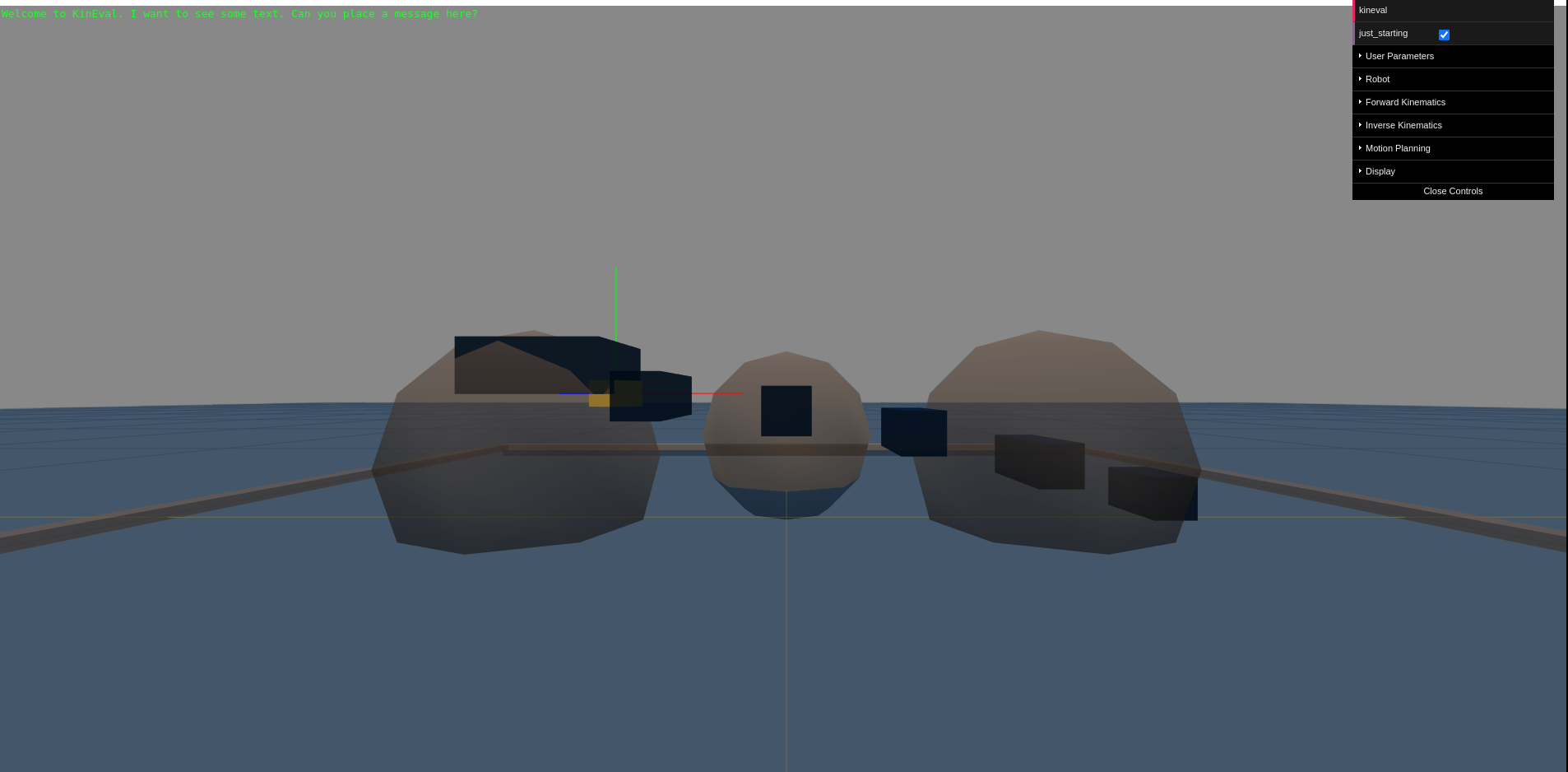

AutoRob projects ground course concepts through implementation in JavaScript/HTML5 supported by the KinEval code stencil (snapshot below from Mozilla Firefox), as well as tutorials for the ROS robot operating system and the rosbridge robot messaging protocol. These projects will cover robot middleware architectures and publish-subscribe messaging models, graph search path planning (A* algorithm), basic physical simulation (Lagrangian dynamics, numerical integrators), proportional-integral-derivative (PID) control, forward kinematics (3D geometric matrix transforms, matrix stack composition of transforms, axis-angle rotation by quaternions), inverse kinematics (gradient descent optimization, geometric Jacobian), and motion planning (simple collision detection, sampling-based motion planning). Additional topics that could be covered include network socket programming, JSON object parsing, potential field navigation, Cyclic Coordinate Descent, Newton-Euler dynamics, task and mission planning, Bayesian filtering, and Monte Carlo localization.

KinEval code stencil and programming framework

AutoRob projects will use the KinEval code stencil that roughly follows conventions and structures from the Robot Operating System (ROS) and Robot Web Tools (RWT) software frameworks, as widely used across robotics. These conventions include the URDF kinematic modeling format, ROS topic structure, and the rosbridge protocol for JSON-based messaging. KinEval uses threejs for in-browser 3D rendering. Projects also make use of the Numeric Javascript external library (or math.js) for select matrix routines, although other math support libraries are being explored. Auxiliary code examples and stencils will often use the jsfiddle development environment.
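As a rough illustration of the JSON-based messaging mentioned above, the sketch below builds and parses rosbridge-style messages as plain strings. The `op`, `topic`, `type`, and `msg` fields follow the rosbridge protocol. The helper names and the example topics (`/joint_states`, `/cmd_vel`) are assumptions chosen for illustration and are not taken from the KinEval stencil.

```javascript
// Hypothetical helpers: only the "op", "topic", "type", and "msg" field
// names come from the rosbridge protocol; everything else is illustrative.

// Build a request to start receiving messages on a topic.
function makeSubscribe(topic, type) {
  return JSON.stringify({ op: "subscribe", topic: topic, type: type });
}

// Build a message that publishes data on a topic.
function makePublish(topic, msg) {
  return JSON.stringify({ op: "publish", topic: topic, msg: msg });
}

// Parse an incoming string; return the topic and payload of a publish
// message, or null for any other operation.
function parseIncoming(raw) {
  const data = JSON.parse(raw);
  if (data.op === "publish") {
    return { topic: data.topic, msg: data.msg };
  }
  return null;
}

// Example: subscribe to joint states, then send a forward velocity command.
const subscribeText = makeSubscribe("/joint_states", "sensor_msgs/JointState");
const commandText = makePublish("/cmd_vel", {
  linear: { x: 0.1, y: 0, z: 0 },
  angular: { x: 0, y: 0, z: 0 },
});
console.log(subscribeText);
console.log(commandText);
```

In a browser, strings like these would typically be sent over a WebSocket connected to a rosbridge server; the connection details depend on the robot and are not shown here.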

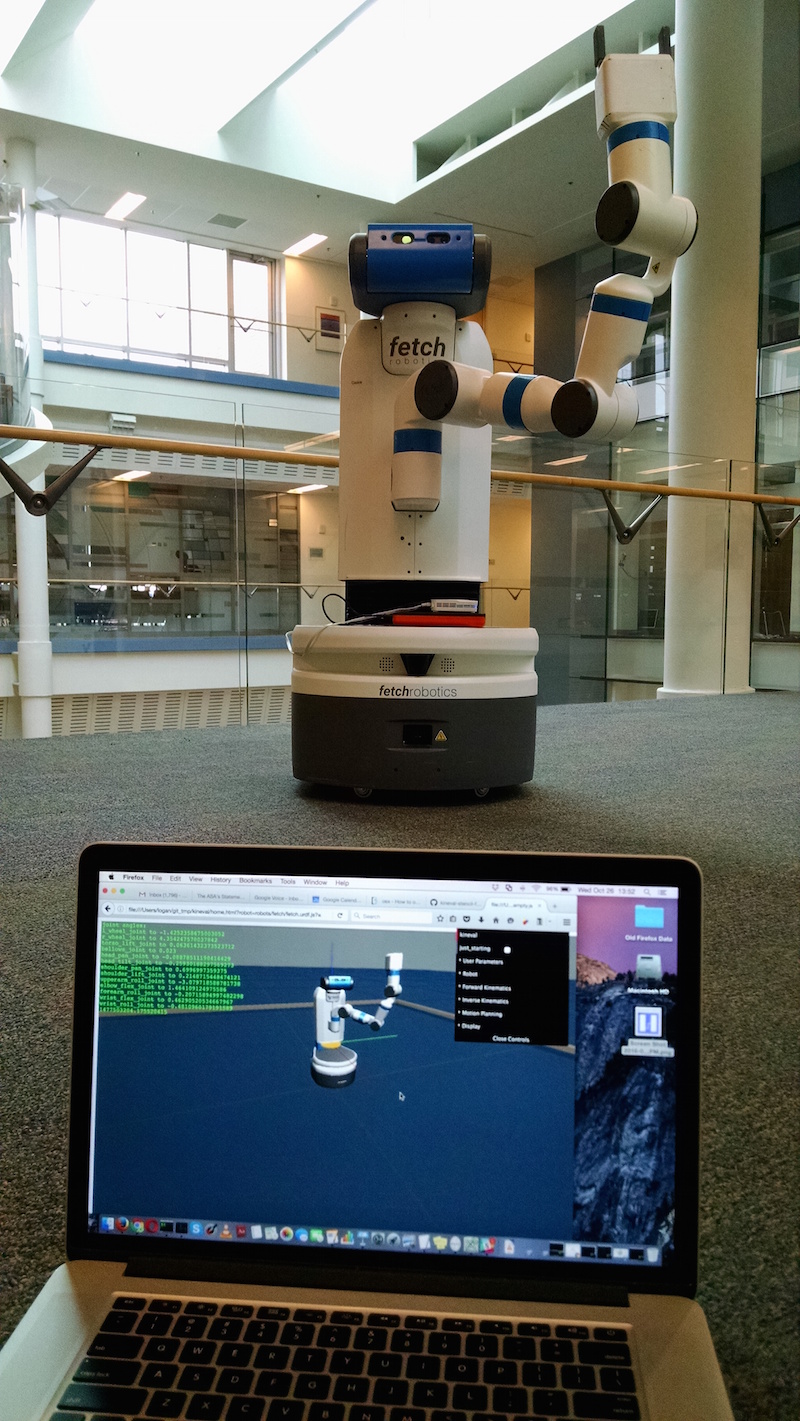

You will use an actual robot (at least once)! While AutoRob projects will be mostly in simulation, KinEval allows for your code to work with any robot that supports the rosbridge protocol, which includes any robot running ROS. Given a URDF description, the code you produce for AutoRob will allow you to view and control the motion of any mobile manipulation robot with rigid links. Your code will also be able to access the sensors and other software services of the robot for your continued work as a roboticist.

Related Courses

In AutoRob, coding is believing. AutoRob is a computing-friendly pathway into robotics. The course aims to provide broad exposure to autonomous robotics, but it does not cover the whole of robotics. The scope of AutoRob is introductory kinematic modeling and reasoning.

AutoRob is well-suited as preparation for a Major Design Experience, such as in EECS 467 (Autonomous Robotics Laboratory). In addition to AutoRob, EECS 467 and ROB 550 (Robotics Systems Laboratory) provide more extensive hands-on experience with a small set of real robotic platforms. In contrast, AutoRob emphasizes the creation of a general kinematics and planning software stack in simulation, with interfaces to work with a diversity of real robots.

AutoRob complements courses covering computational perception, including autonomous navigation in Robotics 330 and EECS 467, probabilistic inference in ROB 530 Mobile Robotics, EECS 442 Computer Vision, and deep learning in EECS 498 Deep Learning for Computer Vision and ROB 498 Deep Learning for Robot Perception. Robots can't work if they can't perceive their environment.

Inference methods covered in AutoRob are complementary to artificial intelligence courses, such as EECS 492 (Introduction to Artificial Intelligence), EECS 445 (Machine Learning), and EECS 595 (Natural Language Processing).

AutoRob is an excellent complement to courses in embedded systems (EECS 373) and advanced topics in embedded system design (EECS 473), as well as courses in sensorimotor control (EECS 460, EECS 461, ME 461). AutoRob and embedded systems perform synergistic functions necessary for a control loop on an autonomous robot. AutoRob is about reasoning from data provided by embedded systems on a robot to compute a control command that is then executed by an embedded system to produce motion.

AutoRob is a computation-focused alternative to ME 567/ROB 510 (Robot Kinematics and Dynamics). ME 567 is a more in-depth mathematical analysis of dynamics and control with extensive use of Denavit-Hartenberg parameters for kinematics. AutoRob has a greater emphasis on algorithmic methods for autonomous path and motion planning for high degree-of-freedom robots and mobile manipulators, along with use of quaternions and matrix stacks for kinematics.

AutoRob is a viable precursor for courses that go deeper into theoretical abstractions in algorithmic robotics (ROB 422) and advanced topics in motion planning (ROB 520).

AutoRob can be taken in parallel with ROB 502 (Programming for Robotics). AutoRob makes some accommodations for students with less programming experience than provided in common data structures courses, such as EECS 280. However, for students new to computer programming, it is highly recommended to take AutoRob after ROB 502, EECS 280, or EECS 402.

Commitment to equal opportunity

We expect all students to treat each other with respect. As indicated in the General Standards of Conduct for Engineering Students, this course is committed to a policy of equal opportunity for all persons and does not discriminate on the basis of race, color, national origin, age, marital status, sex, sexual orientation, gender identity, gender expression, disability, religion, height, weight, or veteran status. Please feel free to contact the course staff with any problem, concern, or suggestion. The University of Michigan Statement of Student Rights and Responsibilities provides greater detail about expected behavior and conflict resolution in our community of scholarship.

Accommodations for Students with Disabilities

If you believe an accommodation is needed for a disability, please let the course instructor know at your earliest convenience. Some aspects of this course, including the assignments, the in-class activities, and the way the course is usually taught, may be modified to facilitate your participation and progress. As soon as you make us aware of your needs, the course staff can work with the Services for Students with Disabilities (SSD, 734-763-3000) office to help us determine appropriate academic accommodations. SSD typically recommends accommodations through a Verified Individualized Services and Accommodations (VISA) form. Any information you provide is private and confidential and will be treated as such. For special accommodations for any academic evaluation (exam, quiz, project), the course staff will need to receive the necessary paperwork issued from the SSD office by January 26, 2024.

Student mental health and well being

The University of Michigan is committed to advancing the mental health and wellbeing of its students. If you or someone you know is feeling overwhelmed, depressed, and/or in need of support, services are available. For help, please contact one of the many resources offered by the University that are committed to helping students through challenging situations, including: U-M Psychiatric Emergency (734-996-4747, 24-hour), Counseling and Psychological Services (CAPS, 734-764-8312, 24-hour), and the C.A.R.E. Center on North Campus. You may also consult the University Health Service (UHS, 734-764-8320) as well as its services for alcohol or drug concerns.

Office Hours Calendar

calendar link

Course Staff and Office Hours

Course Instructors

Chad Jenkins

Jamie Berger

Graduate Student Instructors

Boyang Wang

Instructional Aides

Tianyuan Du

Yufeiyang Gao

Hanzhe Guo

Haoran Zhang

Winter 2024 Course Structure

This semester, the AutoRob course is offered in a synchronous in-person format across three sections: two undergraduate in-person sections (Robotics 380 and EECS 367) and an in-person graduate section (Robotics 511).

Course Meetings:

Monday 4:30-7:20pm Eastern, Robotics Building 1060

The first half of course meetings will be dedicated to a synchronous in-person lecture, as listed on the course schedule. The second half of course meetings will focus on interactive activities, such as extended office hours, addressing general questions and comments regarding course concepts, and project-related tutorials and supplementary activities.

Students are expected to attend all course meetings synchronously at the scheduled location (Robotics Building 1060).

Laboratory Sections

Friday 2:30-4:20pm Eastern, Robotics Building 1060

Lab sections will provide guidance through the workflow of course projects. These sections are primarily intended for students enrolled in the undergraduate sections. For Winter 2024, attendance at lab sections is optional.

The AutoRob office hours queue hosted by EECS will be used to manage queueing for course office hours.

Discussion Services

Piazza

The AutoRob Course Piazza workspace will be the primary service for course-related discussion threads and announcements.

Slack

The AutoRob Winter 2024 workspace hosted by the U-M Enterprise Slack server will be used for optional course-related discussions. Slack is a cloud-hosted online discussion and collaboration system with functionality that resembles Internet Relay Chat (IRC). Slack clients are available for most modern operating systems as well as through the web. Slack is FERPA compliant.

Actively engaging in course discussions is a great way to become a better roboticist.

Prerequisites

This course has recommended prerequisites of "Linear Algebra" and "Data Structures and Algorithms", or permission from the instructor.

Programming proficiency: EECS 280 (Programming and Introductory Data Structures), EECS 402 (Programming for Scientists and Engineers), ROB 502 (Programming for Robotics), or proficiency in data structures and algorithms should provide an adequate programming background for the projects in this course. Interested students should consult with the course instructor if they have not taken EECS 281, EECS 402, ROB 502, or their equivalent, but have some other notable programming experience. EECS 281 (Data Structures and Algorithms) is a required prerequisite for EECS 367, an Upper-Level CS elective.

Mathematical proficiency: Math 214, 217, 417, 419 or proficiency in linear algebra should provide an adequate mathematical background for the projects in this course. Interested students should consult with the course instructor if they have not taken one of the listed courses or their equivalent, but have some other strong background with linear algebra.

Recommended optional proficiency: Differential equations, Computer graphics, Computer vision, Artificial intelligence

The instructor will do their best to cover the necessary prerequisite material, but no guarantees. Linear algebra will be used extensively in relation to 3D geometric transforms and systems of linear equations. Computer graphics is helpful for under-the-hood understanding of threejs. Computer vision and AI share common concepts with this course. Differential equations are used to cover modeling of motion dynamics and inverse kinematics, but not explicitly required.

Textbook

AutoRob is compatible with both the Spong et al. and Corke textbooks (listed below), although only one of these books is needed. Depending on individual styles of learning, one textbook may be preferable over the other. Spong et al. is the listed required textbook for AutoRob and is supplemented with additional handouts. The Corke textbook provides broader coverage with an emphasis on intuitive explanation. A pointer to the Lynch and Park textbook is provided for an alternative perspective on robot kinematics that goes deeper into spatial transforms in exponential coordinates. Lynch and Park also provides some discussion and context for using ROS. This semester, AutoRob will not officially support the Lynch and Park book, but will make every effort to work with students interested in using this text.

Robot Modeling and Control

Mark W. Spong, Seth Hutchinson, and M. Vidyasagar

Wiley, 2005

Available at Amazon

Alternate textbooks

Robotics, Vision and Control: Fundamental Algorithms in MATLAB

Peter Corke

Springer, 2011

Modern Robotics: Mechanics, Planning, and Control

Kevin M. Lynch, Frank C. Park

Cambridge University Press, 2017

Optional texts

JavaScript: The Good Parts

Douglas Crockford

O'Reilly Media / Yahoo Press, 2008

Principles of Robot Motion

Howie Choset, Kevin M. Lynch, Seth Hutchinson, George A. Kantor, Wolfram Burgard, Lydia E. Kavraki, and Sebastian Thrun

MIT Press, 2005

Projects and Grading

The AutoRob course will assign 7 projects (6 programming, 1 oral) and 4 quizzes across all sections of the course. Students in the undergraduate sections will have 4 homework assignments. Students in the graduate section will have advanced extension opportunities.

Each project has been decomposed into a collection of features, each of which is worth a specified number of points. AutoRob project features are graded as "checked" (completed) or "due" (incomplete). Prior to its due date, the grading status of each feature will be in the "pending" state. In terms of workload, each project is expected to take approximately 4 hours of work on average (as a rough estimate from the EECS Workload Survey).

Each quiz will consist of a short set of questions administered synchronously in class. Quiz questions will be within the scope of previously covered lectures and graded projects. Quiz questions will focus on project material and should be readily answerable given knowledge from correctly completing projects on time.

Individual final grades are assigned based on the sum of points earned from coursework (detailed in subsections below). The timing and due dates for course projects and quizzes will be announced on an ongoing basis. The official due date of a project is listed with its project description, such as for Assignment 1: Path Planning. Due dates listed in the course schedule are tentative. All project work must be checked by the final grading deadline to receive credit.

ROB 380/EECS 367: Introduction to Autonomous Robotics

In the undergraduate sections, each fully completed project is weighted as 10 points. The first three quizzes are weighted as 4 points. The final quiz is weighted as 10 points. Four homework assignments will be given to this section, with each weighted as 2 points.

Based on this sum of points from completed coursework, an overall grade for the course is earned as follows: An "A" grade in the course is earned if graded coursework sums to 93 points or above; a "B" grade in the course is earned if graded coursework sums to 83 points or above; a "C" grade in the course is earned if graded coursework sums to 73 points or above. The instructor reserves the option to assign appropriate course grades with plus or minus modifiers.

Students can access the course gradescope portal to access and complete homework assignments.

Students in the undergraduate section have the opportunity to earn 2 extra credit points through optional extensions of the course projects. Extension points are limited to 3 total over the course of the semester, and are limited to the Base Offset transform feature for Forward Kinematics, URDF/FSM Dance Showcase for Project 4 and the IK100in60 extension for Inverse Kinematics.

ROB 511: Mobile Manipulation Systems

In the graduate section, each fully completed project is weighted as 16 points. The first three quizzes are weighted as 4 points. The final quiz is weighted as 10 points. Advanced extensions can be completed to earn up to 10 points. Examples of advanced extensions include implementation of an LU solver for linear systems of equations, inverse kinematics by Cyclic Coordinate Descent, one additional motion planning algorithm, and maximal coordinate simulation of an articulated robot. Advanced extensions are due by the course final grading deadline and do not need to be completed for the deadlines of each assignment.

Based on this sum of points from completed coursework, an overall grade for the course is earned as follows: An "A" grade in the course is earned if graded coursework sums to 130 points or above; a "B" grade in the course is earned if graded coursework sums to 115 points or above; a "C" grade in the course is earned if graded coursework sums to 100 points or above. The instructor reserves the option to assign appropriate course grades with plus or minus modifiers.
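The point totals described for the two sections can be tallied with a short sketch. It assumes that all 7 projects (including the oral project) carry the same per-project weight, and it leaves out the undergraduate extra credit because the stated limits differ. The names below are illustrative, and plus or minus modifiers remain at the instructor's discretion.

```javascript
// Per-section weights and grade thresholds as stated in the syllabus.
const SECTION_RULES = {
  undergrad: { projectPoints: 10, homeworkCount: 4, homeworkPoints: 2,
               thresholds: { A: 93, B: 83, C: 73 } },
  grad:      { projectPoints: 16, homeworkCount: 0, homeworkPoints: 0,
               thresholds: { A: 130, B: 115, C: 100 } },
};
const PROJECT_COUNT = 7;          // assumption: oral project weighted like the others
const QUIZ_POINTS = [4, 4, 4, 10]; // three quizzes plus the final quiz
const GRAD_EXTENSION_CAP = 10;     // advanced extensions, graduate section only

// Points available from projects, quizzes, and homework (no extensions).
function maxBasePoints(section) {
  const rules = SECTION_RULES[section];
  const quizTotal = QUIZ_POINTS.reduce((sum, p) => sum + p, 0);
  return PROJECT_COUNT * rules.projectPoints
       + quizTotal
       + rules.homeworkCount * rules.homeworkPoints;
}

// Map a point sum to a base letter grade.
function letterGrade(section, points) {
  const t = SECTION_RULES[section].thresholds;
  if (points >= t.A) return "A";
  if (points >= t.B) return "B";
  if (points >= t.C) return "C";
  return "below C"; // the syllabus does not define grades below C
}

console.log(maxBasePoints("undergrad"));                 // 100
console.log(maxBasePoints("grad"));                      // 134
console.log(maxBasePoints("grad") + GRAD_EXTENSION_CAP); // 144
console.log(letterGrade("undergrad", 85));               // "B"
```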

Project Rubrics (tentative and subject to change)

The following project features are planned for AutoRob this semester. Students are expected to complete all features.

Assignment 1: 2D Path Planning

| Points | Section | Feature |
|---|---|---|
| 4 | All | Heap implementation |
| 6 | All | A-star search |
| 2 | 511 | BFS |
| 2 | 511 | DFS |
| 2 | 511 | Greedy best-first |

Assignment 2: Pendularm

| Points | Section | Feature |
|---|---|---|
| 3 | All | Euler integrator |
| 3 | All | Velocity Verlet integrator |
| 4 | All | PID control |
| 2 | 511 | Verlet integrator |
| 2 | 511 | RK4 integrator |
| 2 | 511 | Double pendulum |

Assignment 3: Forward Kinematics

| Points | Section | Feature |
|---|---|---|
| 2 | All | Core matrix routines |
| 5 | All | FK transforms |
| 1 | All | Joint selection/rendering |
| 2 | All | New robot definition |
| 2 | 511 | Base offset transform |
| 4 | 511 | Fetch rosbridge interface (due before final grading deadline) |

Assignment 4: Dance Controller

| Points | Section | Feature |
|---|---|---|
| 6 | All | Quaternion joint rotation |
| 1 | All | Interactive base control |
| 1 | All | Pose setpoint controller |
| 2 | All | Dance FSM |
| 3 | Ext | Joint limits |
| 3 | 511 | Prismatic joints |

Assignment 5: Inverse Kinematics

| Points | Section | Feature |
|---|---|---|
| 6 | All | Manipulator Jacobian |
| 4 | All | Gradient descent with Jacobian transpose |
| 3 | 511 | Jacobian pseudoinverse |
| 3 | 511 | Euler angle conversion |

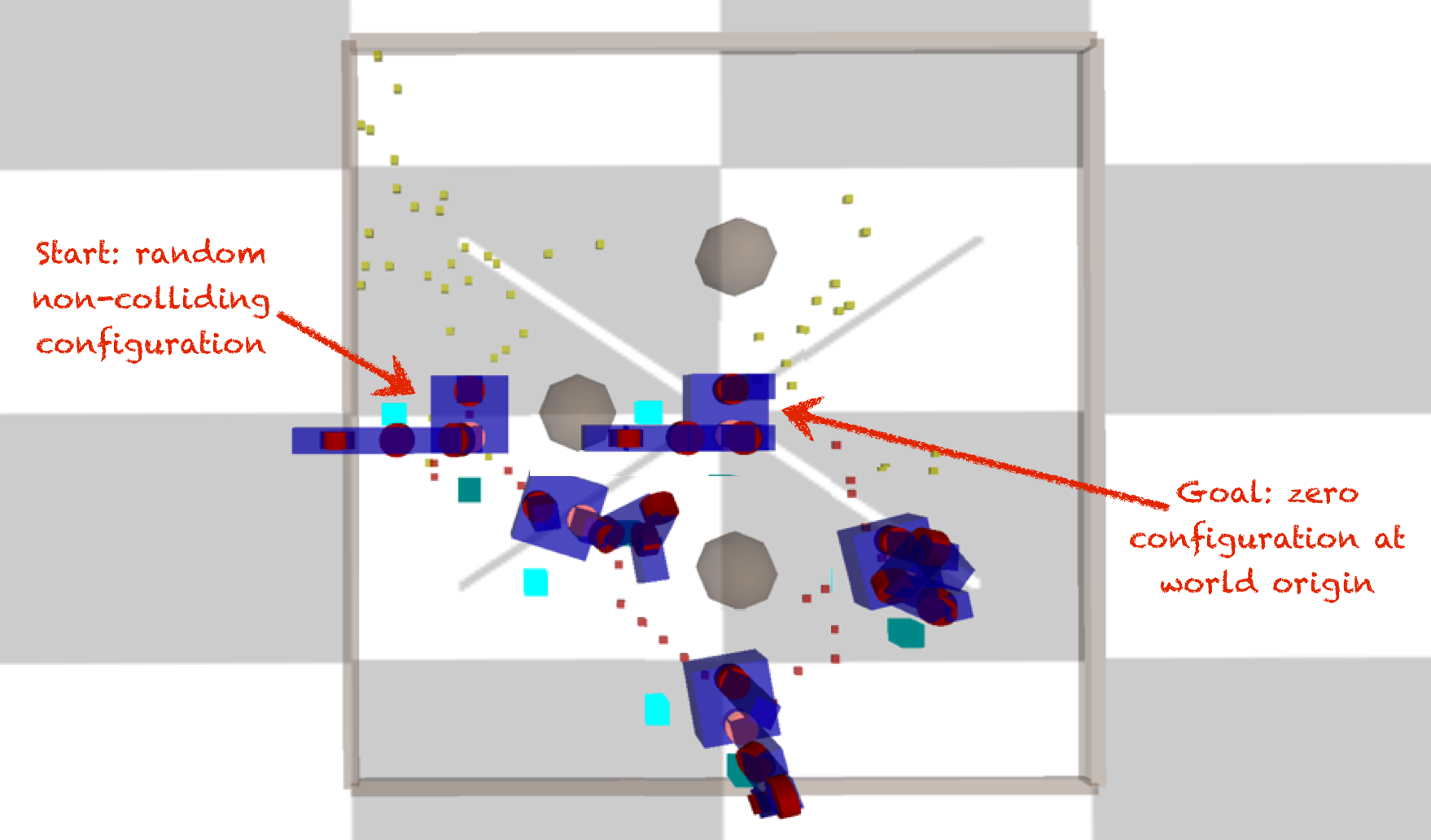

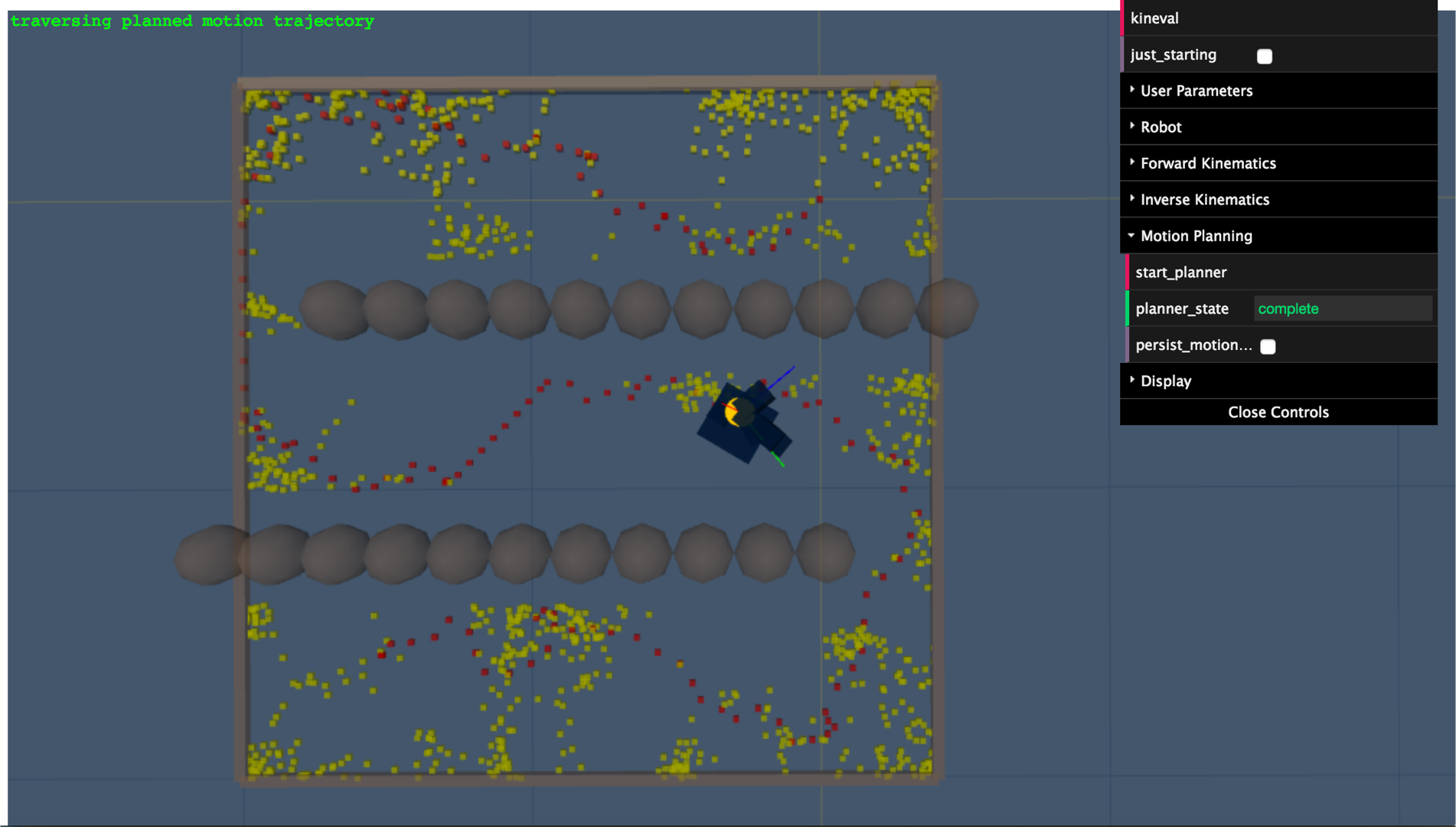

Assignment 6: Motion Planning

| Points | Section | Feature |
|---|---|---|
| 4 | All | 2D RRT-Connect |
| 2 | All | Robot Collision detection |
| 4 | All | Configuration space RRT-Connect |
| 6 | 511 | 2D RRT-Star |

Project Submission and Regrading

Git repositories will be used for project implementation, version control, and submission for grading. The implementation of your project is submitted through an update to the master branch of your designated repository. The course staff will support repositories that use main as their default git branch to the best of our ability. Updates to the master branch must be committed and pushed prior to the due date for each assignment for any consideration of full credit. Your implementation will be checked out and executed by the course staff. Through your repository, you will be notified by the course staff whether your implementation of assignment features is sufficient to receive credit.

Continuous Integration Project Grading

For the Winter 2024 semester, AutoRob will make use of "continuous integration grading" for student project implementations. Grading for a particular project will occur once 3 days before the project deadline, and then continuously after the project deadline.

The "CI grader" will automatically pull code from your repository, run tests for all assignments that are due as of the current time, and push the results of grading back to your repository. Please remember to not break the functionality of project features that are already working in your code. The CI grader will run at regularly scheduled intervals each day. The CI grader is a new aspect of the AutoRob course, as an innovation for scaling the course. Thus, grades automatically generated by the CI grader will be considered tentative and reviewable by the course staff. Your feedback, understanding, and help to improve the CI grader will be greatly appreciated by the course staff.

Late Policy

Do not submit assignments late. The course staff reserves the right to not grade late submissions. The course instructor reserves the right to decline late submissions and/or adjust partial credit on regraded assignments.

If granted by the course instructor, late submissions can be graded for partial credit, with the following guidelines. Submissions pushed within two weeks past the project deadline will be graded for 80% credit. Submissions pushed within four weeks of the project deadline will be graded for 60% credit. Submissions pushed at any time before the semester project submission deadline (April 22, 2024) will be considered for 50% credit. As a reminder, the course instructor reserves the right to decline late submissions and/or adjust partial credit on regraded assignments.
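The late credit guidelines above can be read as a simple lookup on how late a push is. The sketch below is only an illustration of that arithmetic: the function name is hypothetical, the end-of-day cutoff on April 22 is an assumption, and the course instructor may still decline or adjust any late submission.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Return the fraction of credit suggested by the late guidelines.
// All three arguments are JavaScript Date objects.
function lateCreditFraction(projectDeadline, pushedAt, finalDeadline) {
  if (pushedAt <= projectDeadline) return 1.0; // on time: full credit
  if (pushedAt > finalDeadline) return 0.0;    // after final grading: no credit
  const daysLate = (pushedAt - projectDeadline) / MS_PER_DAY;
  if (daysLate <= 14) return 0.8;              // within two weeks
  if (daysLate <= 28) return 0.6;              // within four weeks
  return 0.5;                                  // any time before the final deadline
}

// Example using the Assignment 1 due date (11:59pm, January 26, 2024).
const assignment1Due = new Date(2024, 0, 26, 23, 59);
const semesterFinal = new Date(2024, 3, 22, 23, 59); // assumed end-of-day cutoff
console.log(lateCreditFraction(assignment1Due, new Date(2024, 1, 5, 12, 0), semesterFinal)); // 0.8
```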

Regrading Policy

The regrading policy allows for submission and regrading of projects up through the final grading of projects, which will be April 22 for the Winter 2024 Semester. This regrading policy will grant full credit for project submissions pushed to your repository before the corresponding project deadline. If a feature of a graded project is returned as not completed (or "DUE"), your code can be updated for consideration at 80% credit. This code update must be pushed to your repository within two weeks from when the originally graded project was returned. Regrades of projects updated beyond this two week window can receive at most 60% credit.

Completed Features Policy

All checked features must continue to function properly in your repository up through the final grading deadline (April 22, 2024). Checked features that do not function properly for subsequent projects will be treated as a new submission and subject to the regrading policy.

Final Grading

All grading will be finalized on April 22, 2024. Regrading of specific assignments can be done upon request during office hours. No regrading will be done after grades are finalized.

Repositories

You are expected to use kineval-stencil as a private git repository for your project work in this course through the AutoRob Winter 2024 GitHub Classroom. Please do not use the public version of the kineval-stencil repository, which is provided only for the spirit of open source.

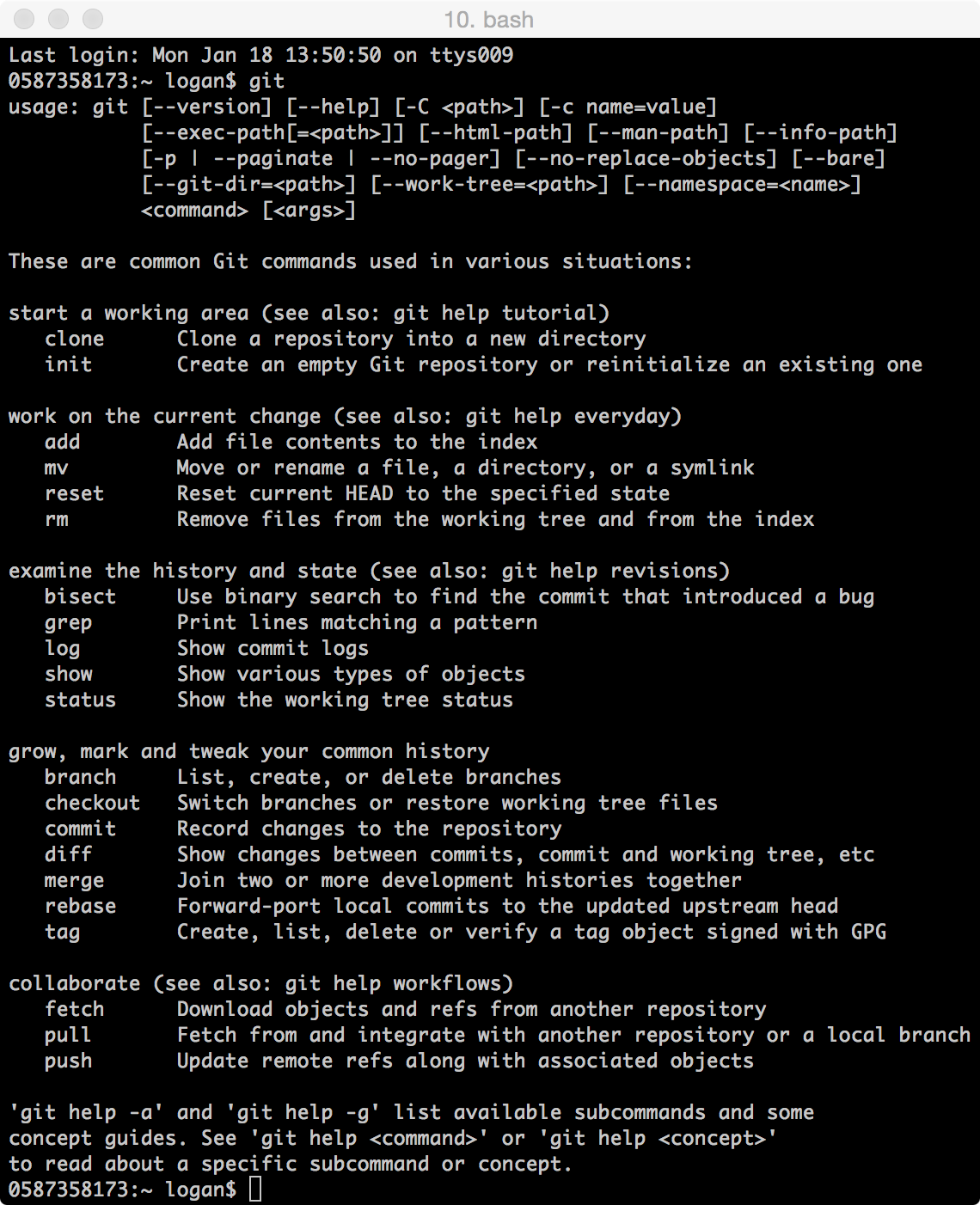

There are many different tutorials for learning how to use git repositories. For those new to version control, we realize git has a significant startup overhead and learning curve, but it is definitely worth the effort. The first laboratory discussion in AutoRob will be dedicated to installing and using git. The AutoRob course site also has its own basic quick start tutorial. The Pro Git book provides an in-depth introduction to git and version control. As different people often learn through different styles, the Git Magic tutorial has also proved quite useful when a different perspective is needed. git: the simple guide has often been a great and accessible quick start resource.

We expect students to use these repositories for collaborative development as well as project submission. It is the responsibility of each student group to ensure their repository adheres to the Collaboration Policy and submission standards for each assignment. Submission standards and examples will be described for each assignment as needed.

IMPORTANT: Do not modify the directory structure in the KinEval stencil. For example, the file "home.html" should appear in the top level of your repository. Repositories that do not follow this directory structure will not be graded.

Code Maintenance Policy and Branching

This section outlines expectations for maintenance of source code repositories used by students for submission of their work in this course. At the discretion of the course staff, repositories that do not maintain these standards will not be graded.

Code submitted for projects in this course must reside in the master branch (or main branch) of your repository. The directory structure provided in the KinEval code stencil must not be modified. For example, the file "home.html" should appear in the top level directory of your repository.

The master branch must always maintain a working (or stable) version of your code for this course. Code in the master branch can be analyzed at any time with respect to any assignment whose due date has passed. Improperly functioning code on the master branch can affect the grading of an assignment (even after the assignment due date) up to the assignment of final grades.

The master branch must always be in compliance with the Michigan Honor Code and Michigan Honor License, as described below in the course Collaboration Policy. To be considered for grading, a commit of code to your master branch must be signed with your name and the instructor name at the bottom of the file named LICENSE with an unmodified version of the Michigan Honor License. Without a properly asserted license file, a code commit to your repository will be considered an incomplete submission and will be ineligible for grading.

If advanced extension features have been implemented and are ready for grading, such features must be listed in the file "advanced_extensions.html" in the top level directory of the master branch with usage instructions. Advanced extension features not listed in this file may not be graded at the discretion of the course staff.

Branching

Students are encouraged to update their repository often with the help of branching. Branching spawns a copy of code in your master branch into a new branch for development, and then merging integrates these changes back into master once they are complete. For example, you can create an Assignment-2 branch for your work on the second project while it is under development and any changes may be experimental, which will keep your master branch stable for grading. Once you are confident in your implementation of the second project, you can merge your Assignment-2 branch back into the master branch. The master branch at this point will have working stable versions of the first and second projects, both of which will be eligible for grading. Similarly, an Assignment-3 branch can be created for the next project as you develop it, and then the Assignment-3 branch can be merged into the master branch when ready for grading. This configuration allows your work to be continually updated and built upon such that versions are tracked and grading interruptions are minimized.

Collaboration Policy

This collaboration policy covers all course material and assignments unless otherwise stated. All submitted assignments for this course must adhere to the Michigan Honor License (the 3-Clause BSD License plus two academic integrity clauses).

Course material, concepts, and documentation may be discussed with anyone. Discussion during quizzes is not allowed with anyone other than a member of the course staff. Assignments may be discussed with the other students at the conceptual level. Discussions may make use of a whiteboard or paper. Discussions with others (or people outside of your assigned project group) cannot include writing or debugging code on a computer or collaborative analysis of source code. You may take notes away from these discussions, provided these notes do not include any source code.

The code for your implementation may not be shown to anyone outside of your assigned project group, including granting access to repositories or careless lack of protection. For example, you do not need to hide the screen of your computer from anyone, but you should not attempt to show anyone your code. When you are done using any robot device such that another group may use it, you must remove all code you have put onto the device. You may not share your code with others outside of your group. At any time, you may show others the implemented program running on a device or simulator, but you may not discuss specific debugging details about your code while doing so.

This policy applies to collaboration during the current semester and any past or future instantiations of this course. Although course concepts are intended for general use, your implementation for this course must remain private after the completion of the course. It is expressly prohibited to share any code previously written and graded for this course with students currently enrolled in this course. Similarly, it is expressly prohibited for any students currently enrolled in this course to refer to any code previously written and graded for this course.

IMPORTANT: To acknowledge compliance with this collaboration policy, append your name to the file "LICENSE" in the main directory of your repository with the following text. This appending action is your attestation of your compliance with the Michigan Honor License and the Michigan Honor Code statement:

"I have neither given nor received unauthorized aid on this course project implementation, nor have I concealed any violations of the Honor Code."

This attestation of the honor code will be considered updated with the current date and time of each commit to your repository. Repository commits that do not include this attestation of the honor code will not be graded at the discretion of the course instructor.

Should you fail to abide by this collaboration policy, you will receive no credit for this course. The University of Michigan reserves the right to pursue any means necessary to ensure compliance. This includes, but is not limited to, prosecution through The College of Engineering Honor Council, which can result in your suspension or expulsion from the University of Michigan. Please refer to the Engineering Honor Council for additional information.

Course Schedule (tentative and subject to change)

Previously recorded slides and lecture recordings are provided for asynchronous and optional parts of the course, as well as preview versions of lectures.

Slides from this course borrow from and are indebted to many sources from around the web. These sources include a number of excellent robotics courses at various universities.

Assignment 1: Path Planning

Due 11:59pm, Friday, January 26, 2024

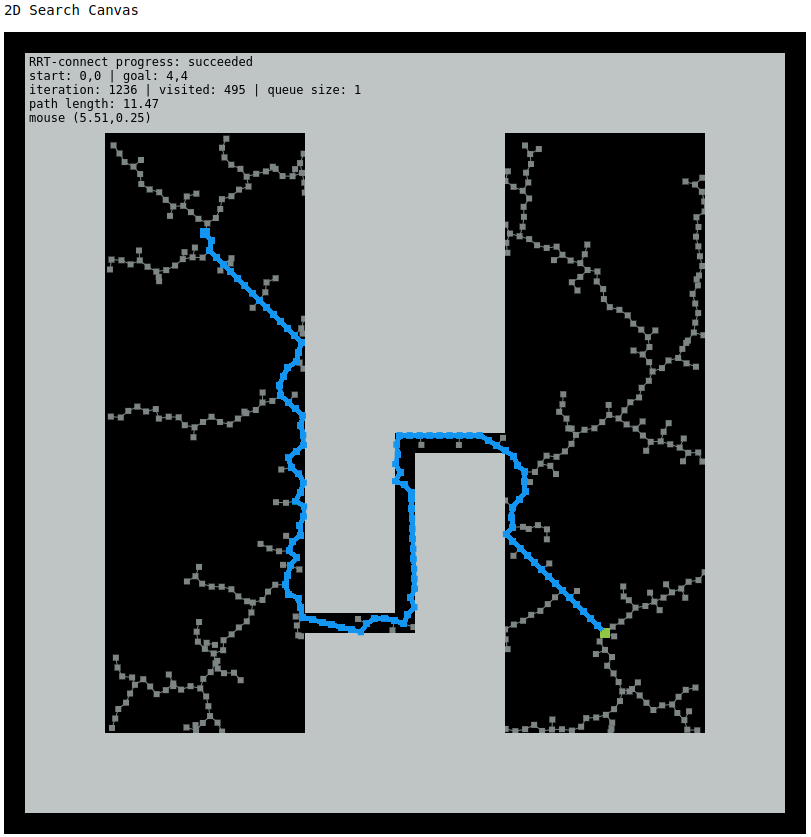

The objective of the first assignment is to implement a collision-free path planner in JavaScript/HTML5. Path planning is used to allow robots to autonomously navigate in environments from previously constructed maps. A path planner essentially finds a set of waypoints (or setpoints) for the robot to traverse and reach its goal location without collision. As covered in other courses (EECS 467, ROB 550, or ROB 530), such maps can be estimated through methods for simultaneous localization and mapping. Below is an example from EECS 467 where a robot performs autonomous navigation while simultaneously building an occupancy grid map:

For this assignment, you will implement the planning part of autonomous navigation as an A-star graph search algorithm. Unlike in the above video, where the map is built as the robot explores, you will be given a complete map of the robot's world to run A-star on. A-star infers the shortest path from a start to a goal location in an arbitrary 2D world with a known map (or collision geometry). This A-star implementation will consider locations in a uniformly spaced, 4-connected grid. A-star requires an admissible heuristic, which can be the Euclidean distance to the goal in your implementation. You will implement a heap data structure as a priority queue for visiting locations in the search grid.

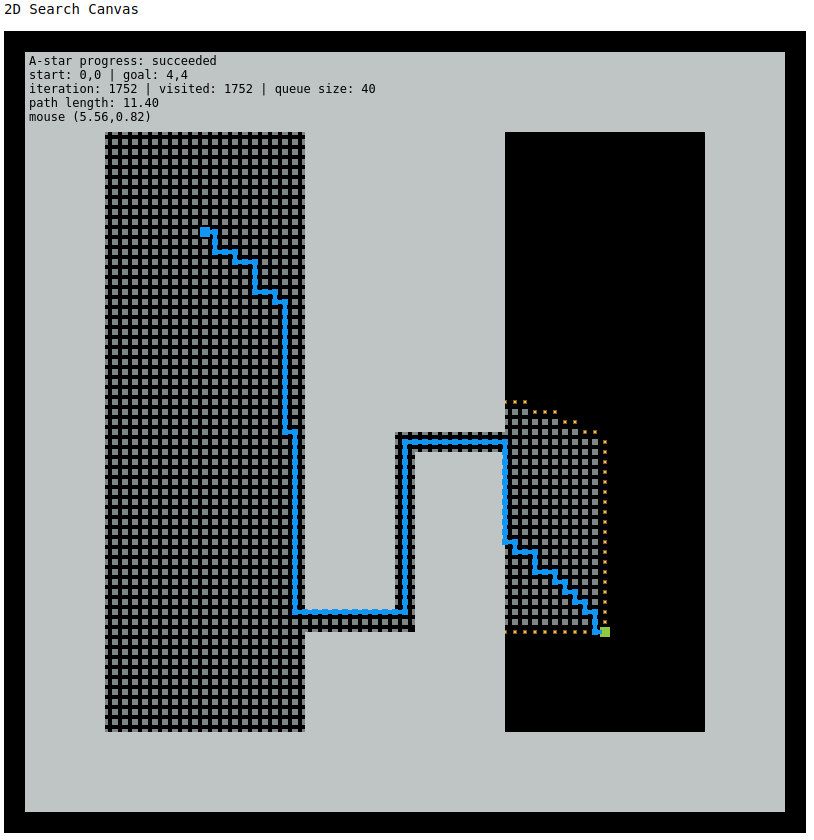

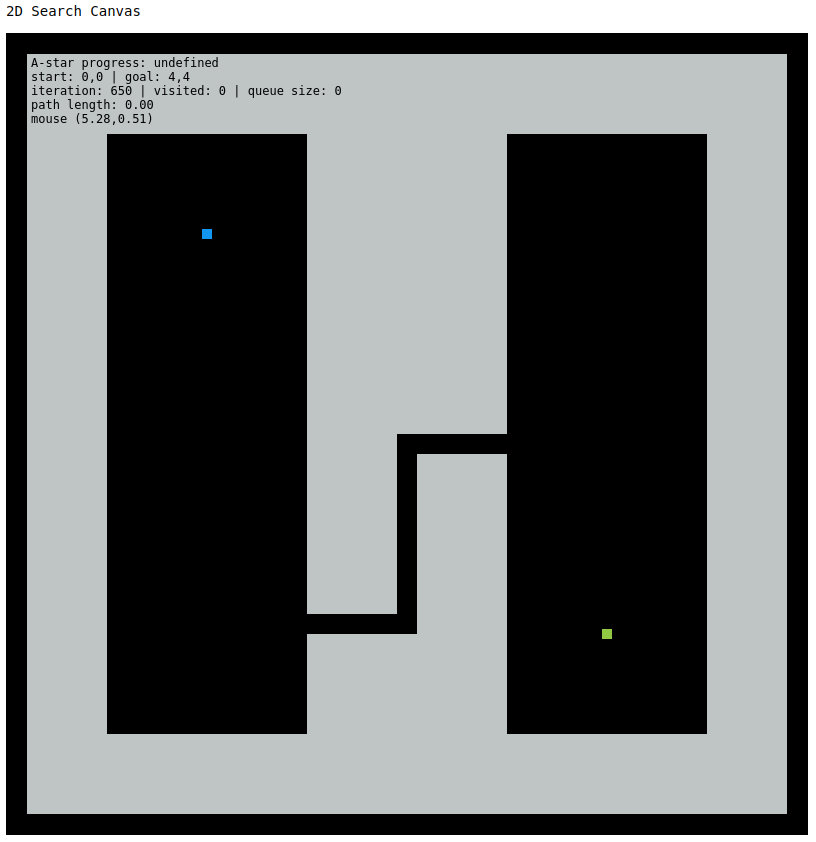

If properly implemented, the A-star algorithm should produce the following path (or path of similar length) using the provided code stencil:

Features Overview

This assignment requires the following features to be implemented in the corresponding files in your repository:

- Heap implementation in "tutorial_heapsort/heap.js"
- A-star search in "project_pathplan/graph_search.js"

Points distributions for these features can be found in the project rubric section. More details about each of these features and the implementation process are given below.

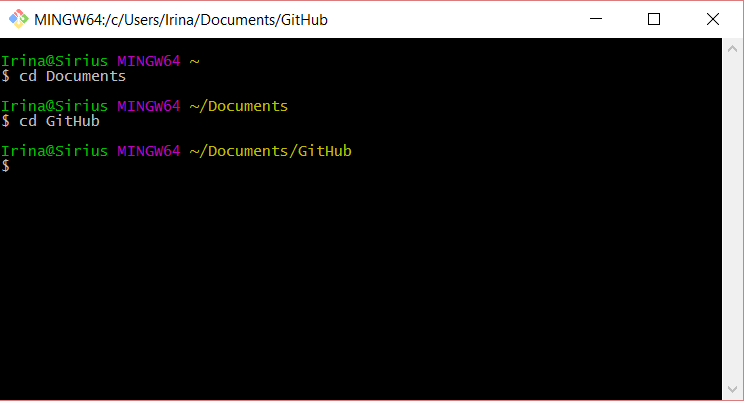

Cloning the Stencil Repository

If you have not done so already, the first step for completing this project (and all projects for AutoRob) is to clone the KinEval stencil repository. The appended git quick start below is provided for those unfamiliar with git to perform this clone operation, as well as committing and pushing updates for project submission. IMPORTANT: the stencil repository should be cloned and not forked -- and we will use this single repository for all projects in the course.

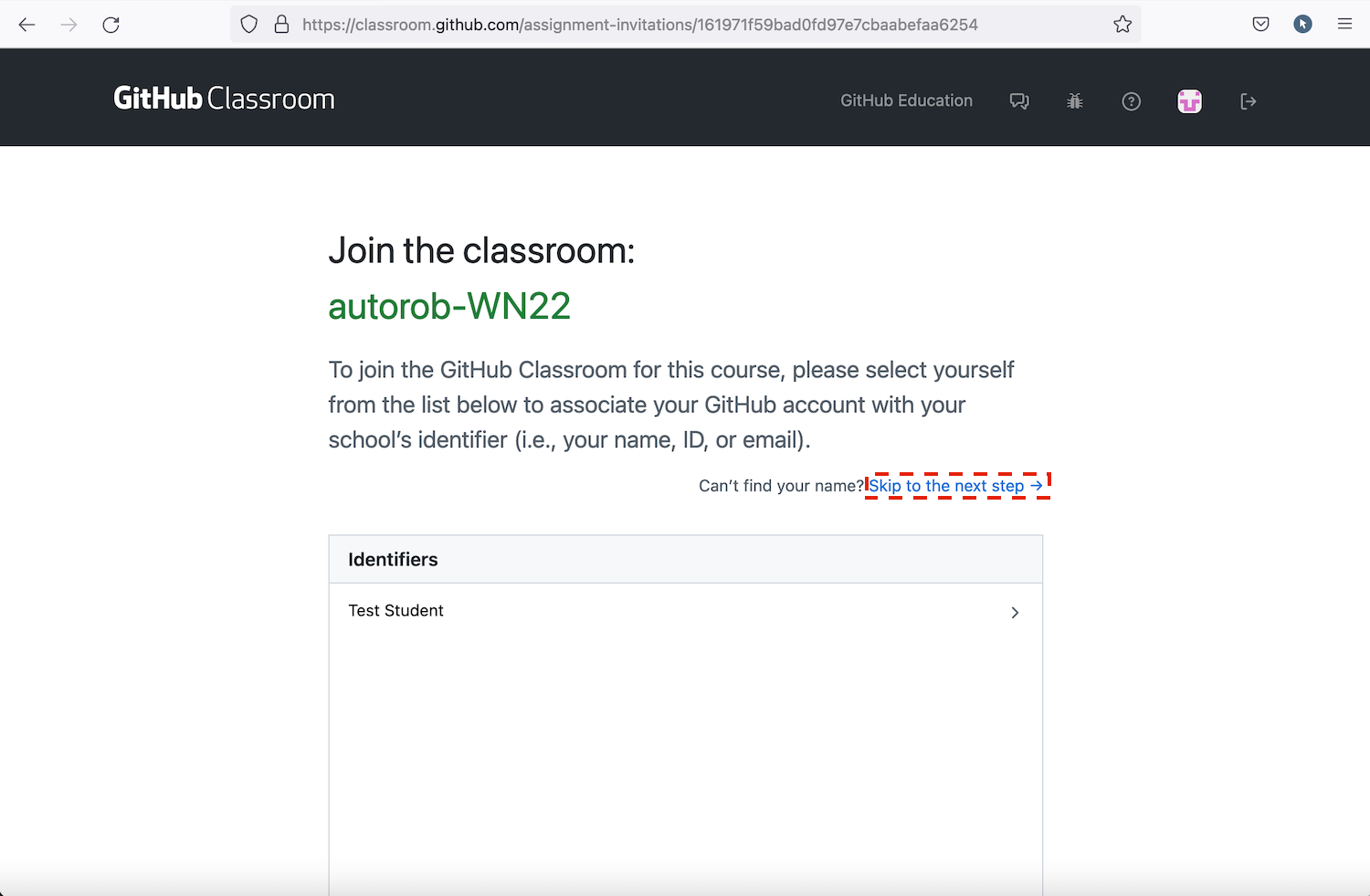

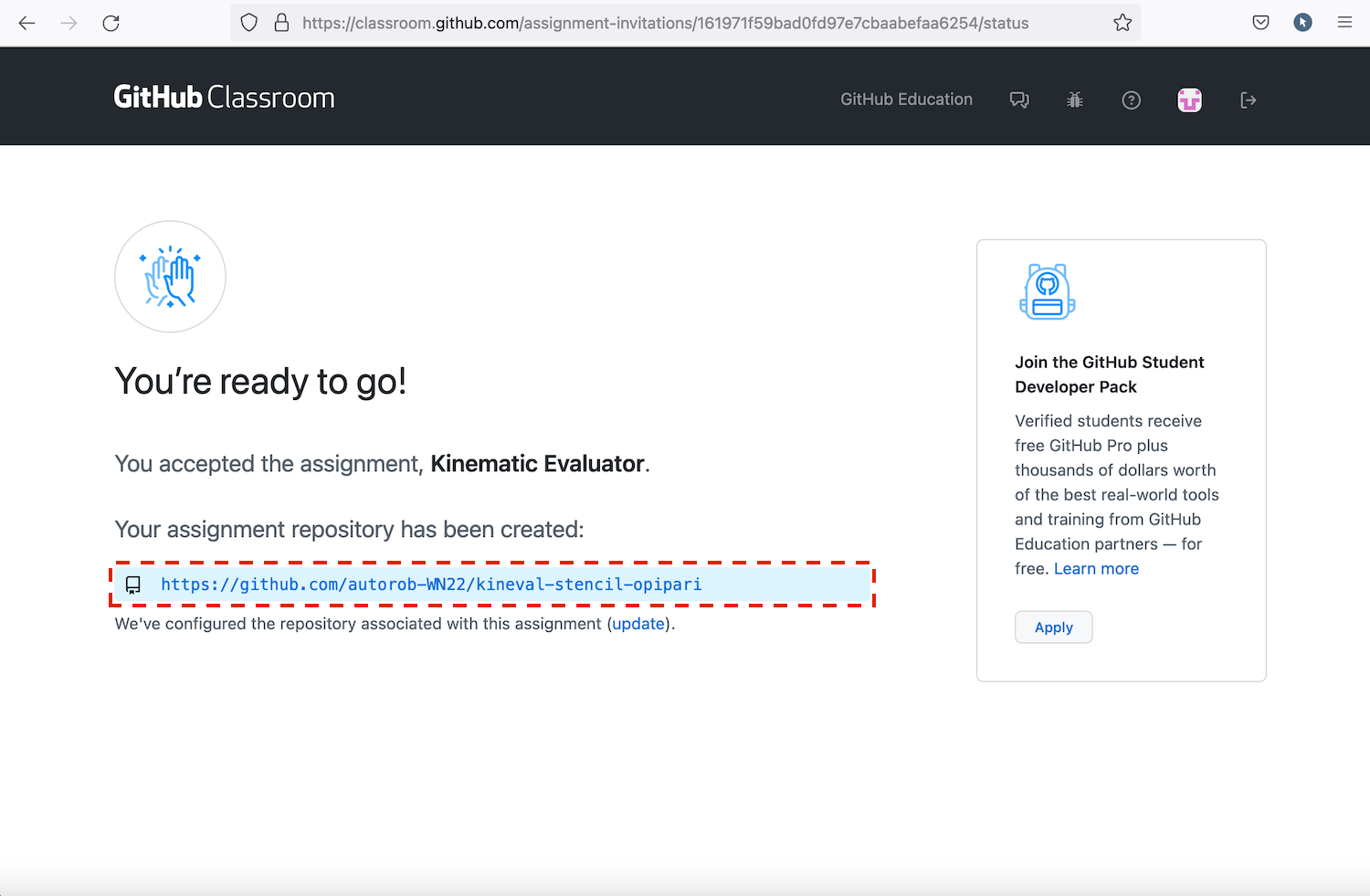

Please join our autorob-WN24 GitHub Classroom. By following the preceding link, your GitHub account will be linked to our 'classroom' and a private clone of the KinEval stencil repository will be created for you to use. Your private repository will automatically be named kineval-stencil-<username>.

Throughout the KinEval code stencil, there are markers with the string "STENCIL" for code that needs to be completed for course projects. For this assignment, you will write code where indicated by the "STENCIL" marker in "tutorial_heapsort/heap.js" and "project_pathplan/graph_search.js".

Heap Sort Tutorial

The starting point for this assignment is to complete the heap sort implementation in the "tutorial_heapsort" subdirectory of the stencil repository. In this directory, a code stencil in JavaScript/HTML5 is provided in two files: "heapsort.html" and "heap.js". Comments are provided throughout these files to describe the structure of JavaScript/HTML5 and its programmatic features.

If you are new to JavaScript/HTML5, there are other tutorial-by-example files in the "tutorial_js" directory. Any of these files can be run by simply opening them in a web browser. Note that these are examples only, and there are no assignment requirements in the "tutorial_js" files.

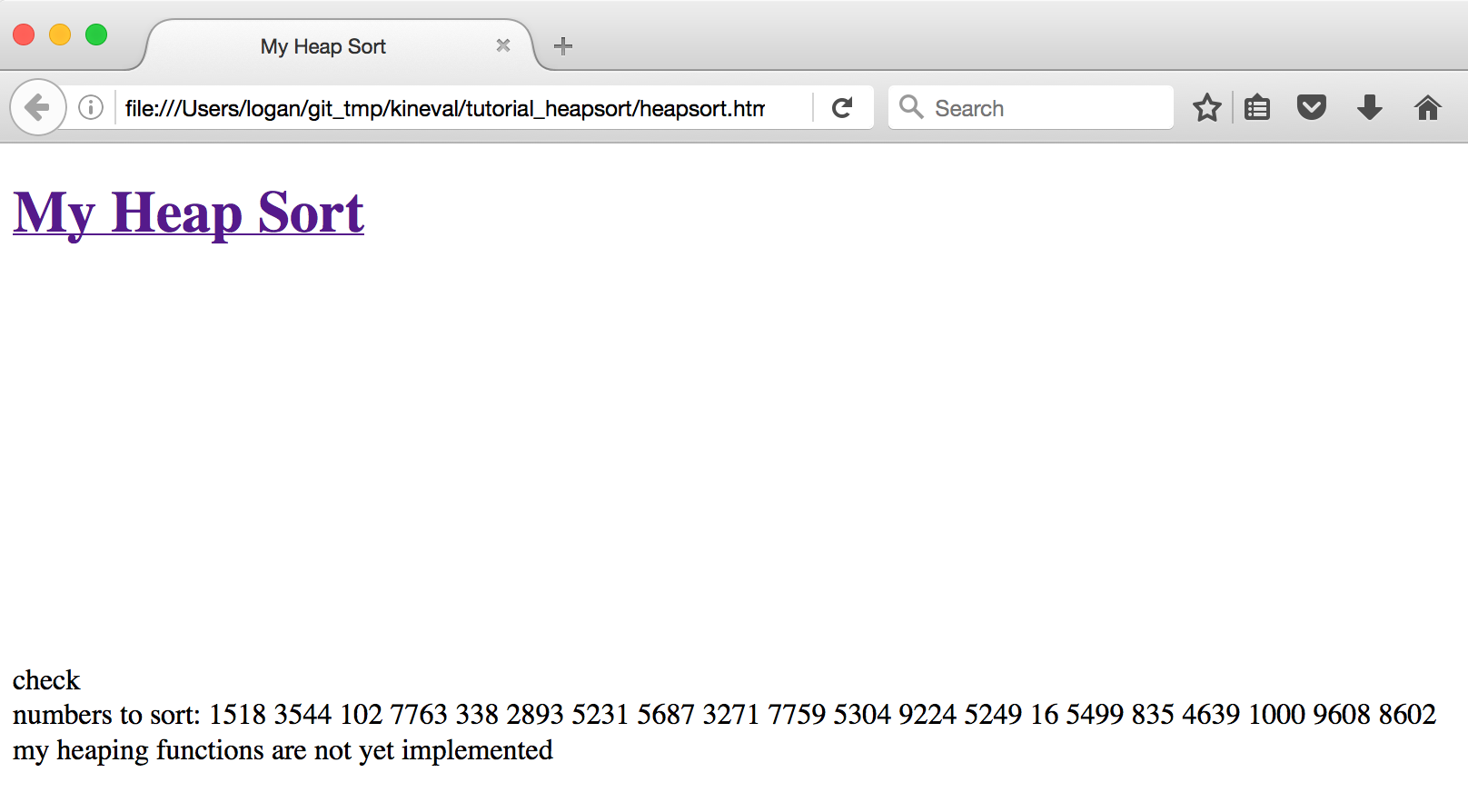

Opening "heapsort.html" will show the result of running the incomplete heap sort implementation provided by the code stencil:

To complete the heap sort implementation, complete the heap implementation in "heap.js" at the locations marked "STENCIL". In addition, the inclusion of "heap.js" in the execution of the heap sort will require modification of "heapsort.html".
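As a rough illustration of the idea behind heap sort, the sketch below builds an array-backed binary min-heap and repeatedly extracts the minimum. The names heapInsert, heapExtractMin, and heapSort are hypothetical and are not taken from the stencil; the function names and calling conventions in "heap.js" may differ, so treat this only as a reference for the sift-up and sift-down logic.

```javascript
// Array-backed binary min-heap of numbers.
// All function names here are illustrative assumptions, not the stencil's API.
function heapInsert(heap, value) {
    heap.push(value);
    let child = heap.length - 1;
    // Sift up: swap with the parent while the new element is smaller.
    while (child > 0) {
        const parent = Math.floor((child - 1) / 2);
        if (heap[parent] <= heap[child]) break;
        [heap[parent], heap[child]] = [heap[child], heap[parent]];
        child = parent;
    }
}

function heapExtractMin(heap) {
    if (heap.length === 0) return undefined;
    const min = heap[0];
    const last = heap.pop();
    if (heap.length > 0) {
        heap[0] = last;
        let parent = 0;
        // Sift down: swap with the smaller child until heap order is restored.
        while (true) {
            const left = 2 * parent + 1;
            const right = left + 1;
            let smallest = parent;
            if (left < heap.length && heap[left] < heap[smallest]) smallest = left;
            if (right < heap.length && heap[right] < heap[smallest]) smallest = right;
            if (smallest === parent) break;
            [heap[parent], heap[smallest]] = [heap[smallest], heap[parent]];
            parent = smallest;
        }
    }
    return min;
}

function heapSort(values) {
    const heap = [];
    for (const v of values) heapInsert(heap, v);
    const sorted = [];
    while (heap.length > 0) sorted.push(heapExtractMin(heap));
    return sorted;
}
```

For path planning, the same structure can hold graph cells instead of numbers by comparing a priority field rather than the values themselves.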

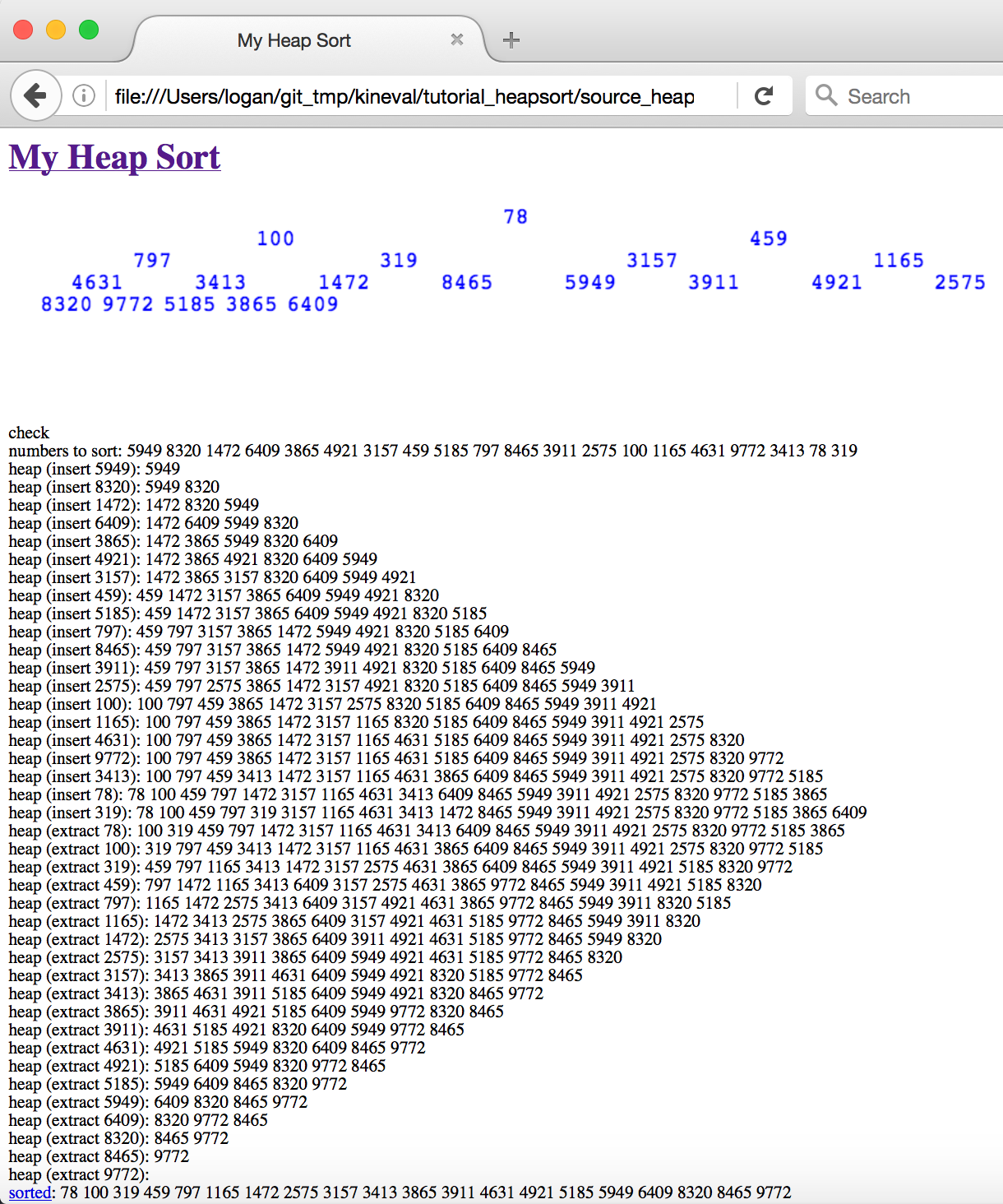

A successful heap sort implementation will show the following result for a randomly generated set of numbers:

Graph Search Stencil

For the path planning implementation, a JavaScript/HTML5 code stencil has been provided in the "project_pathplan" subdirectory. The main HTML file, "search_canvas.html", includes JavaScript code from "draw.js", "infrastructure.js", "graph_search.js", and the "/scenes" directory. Of these files, students should edit only "graph_search.js", although you may want to examine the other files to understand the available helper functions. There will also be an optional activity involving adding new planning scene files under the "/scenes" directory. Opening "search_canvas.html" in a browser should display an empty 2D world in an HTML5 canvas element.

There are five provided planning scenes under the "/scenes" directory within this code stencil: "empty.js", "misc.js", "narrow1.js", "narrow2.js", and "three_sections.js". The choice of planning_scene can be specified from the URL given to the browser, as described in comments in "search_canvas.html". For example, the URL "search_canvas.html?planning_scene=scenes/narrow2.js" will bring up the "narrow2.js" planning world shown above. Other execution parameters, such as start and goal location, can also be specified through the document URL. A description of these parameters is also provided in "search_canvas.html".

This code stencil is implemented to perform graph search iterations interactively in the browser. The core of the search implementation is performed by the function iterateGraphSearch(). This function performs a graph search iteration for a single location in the A-star execution. The browser implementation cannot use a while loop over search iterations, as in the common A-star implementation. Such a while loop would keep control within the search function and cause the browser to become non-responsive. Instead, iterateGraphSearch() returns control to the main animate() function after each iteration, which is responsible for updating the display and user interaction.
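The pattern of doing one unit of work per frame can be sketched as follows. This is a minimal illustration with hypothetical names (makeIterativeCounter, animateOnce, scheduleNextFrame) and a counter standing in for the search; it is not the stencil's animate() code.

```javascript
// A stand-in "search" that needs several steps to finish.
// Names are hypothetical; the stencil uses iterateGraphSearch() and animate().
function makeIterativeCounter(limit) {
    const state = { count: 0, iterate: true };
    state.step = function () {
        if (!state.iterate) return;
        state.count += 1;                              // one unit of work per call
        if (state.count >= limit) state.iterate = false; // signal completion
    };
    return state;
}

// One animation frame: do a single step, then hand control back to the
// caller and ask to be scheduled again only if work remains.
function animateOnce(search, scheduleNextFrame) {
    search.step();
    if (search.iterate) {
        scheduleNextFrame(function () {
            animateOnce(search, scheduleNextFrame);
        });
    }
}
```

In a browser, scheduleNextFrame could be requestAnimationFrame, so the page can redraw and handle input between steps. The flag plays the same role as the search_iterate variable described in this section.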

Within the code stencil, you will complete the functions initSearchGraph() and iterateGraphSearch() as well as add functions for heap operations. Locations in "graph_search.js" where code should be added are labeled with the "STENCIL" string.

The initSearchGraph() function creates a 2D array over graph cells to be searched. Each element of this array contains various properties computed by the search algorithm for a particular graph cell. Remember, a graph cell represents a square region of space in the 2D planning scene. The size of each cell is specified by the "eps" parameter, as the lengths of the square sides. initSearchGraph() must determine the start node for accessing the planning graph from the start pose of the robot, specified as a 2D vector in parameter "q_init". The visit queue is initialized as this start node.
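A minimal sketch of this kind of grid setup is given below. It assumes a square world spanning [min, max] on both axes, and the function and field names (buildSearchGrid, cellForPosition, distance, priority, and so on) are illustrative assumptions rather than the names used in the stencil.

```javascript
// Build a 2D array of cells spaced eps apart over a square world.
// The world bounds and all field names are assumptions for illustration.
function buildSearchGrid(min, max, eps) {
    const n = Math.round((max - min) / eps) + 1;
    const grid = [];
    for (let i = 0; i < n; i++) {
        grid.push([]);
        for (let j = 0; j < n; j++) {
            grid[i].push({
                i: i, j: j,                       // indices into the grid
                x: min + i * eps,                 // world coordinates of the cell
                y: min + j * eps,
                parent: null,                     // predecessor along the best known path
                distance: Infinity,               // path cost from the start
                priority: null,                   // cost plus heuristic
                visited: false,
                queued: false
            });
        }
    }
    return grid;
}

// Map a world position such as q_init to its nearest grid cell.
function cellForPosition(grid, q, min, eps) {
    const i = Math.round((q[0] - min) / eps);
    const j = Math.round((q[1] - min) / eps);
    return grid[i][j];
}
```

The start node found this way would have its distance set to zero before being placed on the visit queue.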

The iterateGraphSearch() function should perform a search iteration towards the goal pose of the robot, specified as a 2D vector in parameter "q_goal". The search must find a goal node that allows for departure from the planning graph without collision. iterateGraphSearch() makes use of three provided helper functions. testCollision([x, y]) returns a boolean of whether a given 2D location, as a two-element vector [x, y], is in collision with the planning scene. draw_2D_configuration([x, y], type) draws a square at a given location in the planning world to indicate that location has been visited by the search (type = "visited") or is currently in the planning queue (type = "queued"). Incrementing the "search_visited" global variable will update the number of nodes visited displayed on the page. Once the search is complete, drawHighlightedPathGraph(l) will render the path produced by the search algorithm between location l and the start location. The global variable search_iterate should be set to false when the search is complete to end the animation loop.
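For reference, the overall A-star logic on a 4-connected grid can be sketched in a self-contained form. This sketch departs from the stencil in several labeled ways: it runs in a plain while loop rather than one iteration per frame, it uses a boolean occupancy array in place of testCollision(), and it picks the next cell by a linear scan rather than a heap. All names are hypothetical.

```javascript
// A-star over a small occupancy grid; blocked[i][j] === true means collision.
// Unit step cost, Euclidean heuristic, 4-connected neighbors.
function astarGrid(blocked, start, goal) {
    const rows = blocked.length, cols = blocked[0].length;
    const key = (c) => c[0] + "," + c[1];
    const h = (c) => Math.hypot(c[0] - goal[0], c[1] - goal[1]);
    const dist = new Map([[key(start), 0]]);
    const parent = new Map();
    const visited = new Set();
    const queue = [{ cell: start, priority: h(start) }];
    while (queue.length > 0) {
        // Naive stand-in for a heap: linear scan for the lowest priority.
        let best = 0;
        for (let k = 1; k < queue.length; k++) {
            if (queue[k].priority < queue[best].priority) best = k;
        }
        const current = queue.splice(best, 1)[0].cell;
        if (visited.has(key(current))) continue;
        visited.add(key(current));
        if (current[0] === goal[0] && current[1] === goal[1]) {
            // Walk parent links back to the start to recover the path.
            const path = [current];
            while (parent.has(key(path[0]))) path.unshift(parent.get(key(path[0])));
            return path;
        }
        for (const [di, dj] of [[-1, 0], [1, 0], [0, -1], [0, 1]]) {
            const next = [current[0] + di, current[1] + dj];
            if (next[0] < 0 || next[0] >= rows || next[1] < 0 || next[1] >= cols) continue;
            if (blocked[next[0]][next[1]] || visited.has(key(next))) continue;
            const g = dist.get(key(current)) + 1;
            if (!dist.has(key(next)) || g < dist.get(key(next))) {
                dist.set(key(next), g);
                parent.set(key(next), current);
                queue.push({ cell: next, priority: g + h(next) });
            }
        }
    }
    return null; // no collision-free path exists
}
```

In the stencil's structure, one pass through the body of the while loop corresponds roughly to one call of iterateGraphSearch().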

Graduate Section Requirement

In addition to the A-star algorithm, students in the graduate section of AutoRob must additionally implement path planning by Depth-first search, Breadth-first search, and Greedy best-first search. An additional report is required in "report.html" (you will need to create this file) in the "project_pathplan" directory. This report must: 1) show results from executing every search algorithm with every planning world for various start and goal configurations and 2) synthesize these results into coherent findings about these experiments.

For effective communication, it is recommended to think of "report.html" like a short research paper: motivate the problem, set the value proposition for solving the problem, describe how your methods can address the problem, and show results that demonstrate how well these methods realize the value proposition. Visuals are highly recommended to complement this description. The best research papers can be read in three ways: once in text, once in figures, and once in equations. It is also incredibly important to remember that writing in research is about generalizable understanding of the problem more than a specific technical accomplishment.

Advanced Extensions

Advanced extensions can be submitted anytime before the final grading is complete. Concepts for several of these extensions will not be covered until later in the semester. Any new path planning algorithm must be implemented within its own ".js" file under the "project_pathplan" directory, and invoked through a parameter given through the URL. For example, the Bug0 algorithm must be invoked by adding the argument "?search_alg=Bug0" to the URL. Thus, a valid invocation of Bug0 for the Narrow2 world could use the URL (for the appropriate location of the file on your computer's filesystem):

file:///myfilesystem/kineval-stencil/project_pathplan/search_canvas.html?planning_scene=scenes/narrow2.js?search_alg=Bug0

The same format must be used to invoke any other algorithm (such as Bug1, Bug2, TangentBug, Wavefront, etc.). Note that you will need to update the animate loop in draw.js to include new planning algorithms and update the main HTML file to include your new scripts, along with implementing the algorithms in their own files.

Of the possible advanced extension points, two additional points for this assignment can be earned by implementing the "Bug0", "Bug1", "Bug2", and "TangentBug" navigation algorithms. The implementation of these bug algorithms must be contained within the file "bug.js" under the "project_pathplan" directory.

Of the possible advanced extension points, two additional points for this assignment can be earned by implementing navigation by "Potential" fields and navigation using the "Wavefront" algorithm. The implementation of these potential-based navigation algorithms must be contained within the file "field_wave.js" under the "project_pathplan" directory.

Of the possible advanced extension points, one additional point for this assignment can be earned by implementing a navigation algorithm using a probabilistic roadmap ("PRM"). This roadmap algorithm implementation must be contained within the file "prm.js" under the "project_pathplan" directory.

Of the possible advanced extension points, one additional point for this assignment can be earned by implementing costmap functionality using morphological operators. Based on the computed costmap, the navigation routine would provide path cost in addition to path length for a successful search. The implementation of this costmap must be contained within the file "costmap.js" under the "project_pathplan" directory.

Of the possible advanced extension points, one additional point for this assignment can be earned by implementing a priority queue through a Fibonacci heap. The implementation of this priority queue must be contained within the file "fibonacci_heap.js" under the "project_pathplan" directory.

Of the possible advanced extension points, one additional point for this assignment can be earned by adapting the search canvas to plan between any locations in the map "bbb2ndfloormap.png" (provided in the stencil repository) when the "planning_scene" parameter is invoked as "BeysterFloor2".

NEW -- Of the possible advanced extension points, four additional points for this assignment can be earned by implementing valid game logic for a snake game in the code stencil and autonomously controlling the snake using the A-star algorithm. An example of autonomous snake game pathfinding is described in this video.

Project Submission

For turning in your assignment, ensure your completed project code has been committed and pushed to the master branch of your repository.

If you are paying attention, you should also add a directory to your repository called "me". This "me" directory should include a simple webpage in the file "me.html". The "me.html" file should have a title with your name, an h1 tag with your name, body with a brief introduction about you, and a script tag that prints the result of Array(16).join("wat"-1)+" Batman!" to the console.

Assignment 2: Pendularm

Due 11:59pm, Friday, February 9, 2024

Physical simulation is widely used across robotics to test robot controllers as well as generate training data for learned controllers. Testing and training in simulation has many benefits, such as avoiding the risk of damaging a (likely expensive) robot and faster development of controllers. The video below about the NVIDIA Isaac Sim highlights many of the advantages and advancements towards narrowing the sim-to-real gap. Simulation also allows for consideration of environments not readily available for testing, such as for interplanetary exploration. We will now model and control our first robot, the Pendularm, to achieve an arbitrary desired setpoint state.

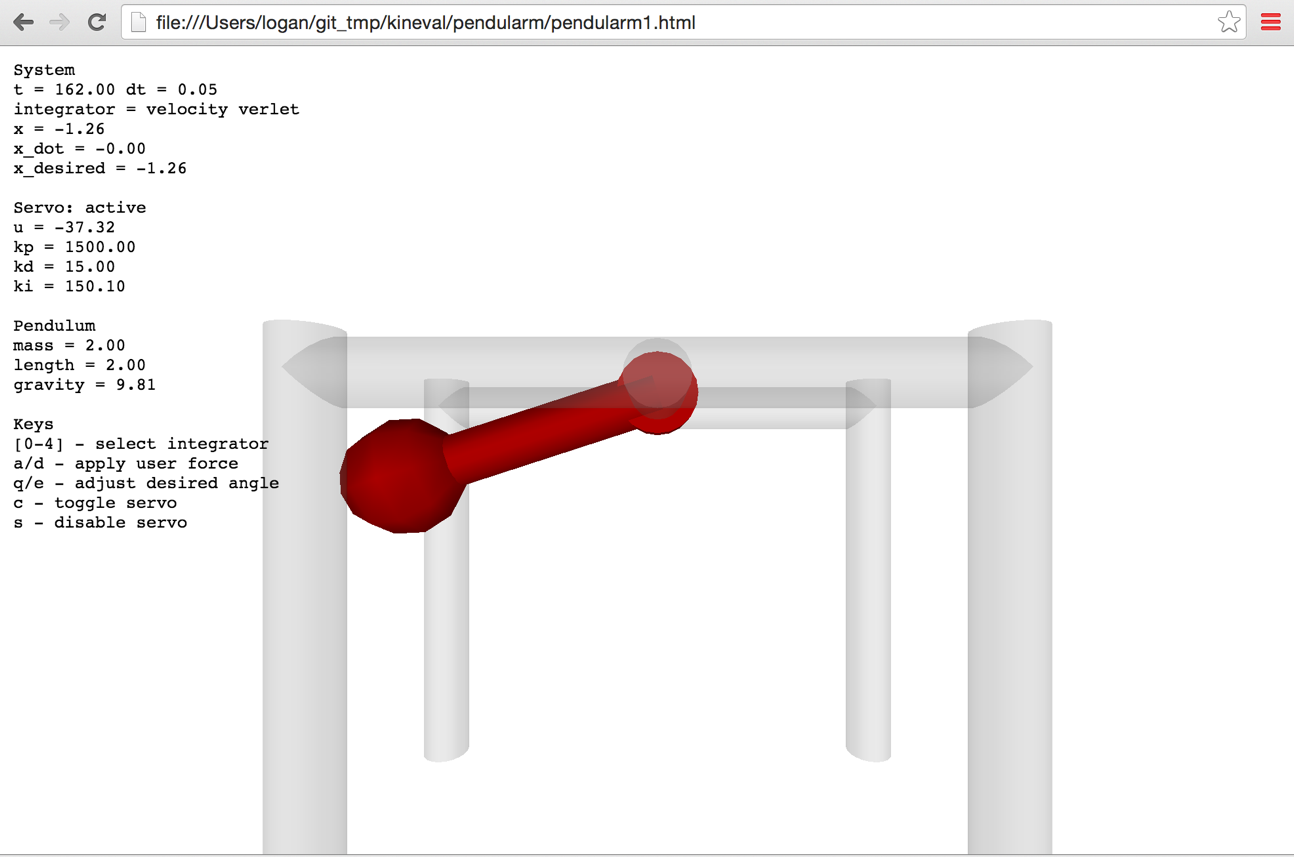

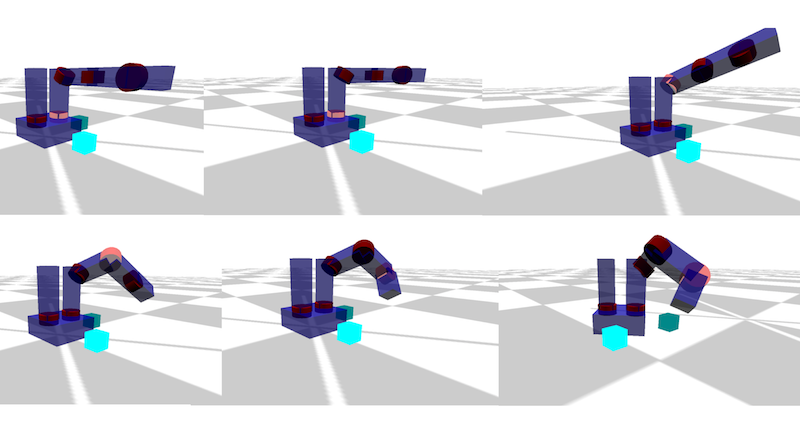

As an introduction to building your own robot simulator, your task is to implement physical dynamics and a servo controller for a simple 1 degree-of-freedom robot system. This system is a 1-DOF robot arm modeled as a frictionless simple pendulum with a rigid massless rod and idealized motor. A visualization of the Pendularm system is shown below. Students in the graduate section will extend this system into a 2-link 2-DOF robot arm, as an actuated double pendulum.

Features Overview

This assignment requires the following features to be implemented in the corresponding files in your repository:

- Euler integrator in "project_pendularm/update_pendulum_state.js"
- Velocity Verlet integrator in "project_pendularm/update_pendulum_state.js"
- PID controller in "project_pendularm/update_pendulum_state.js"
- [Grad section only] Verlet integrator in "project_pendularm/update_pendulum_state.js"
- [Grad section only] Runge-Kutta 4 integrator in "project_pendularm/update_pendulum_state.js"
- [Grad section only] Double pendulum implementation in "project_pendularm/update_pendulum_state2.js"

Points distributions for these features can be found in the project rubric section. More details about each of these features and the implementation process are given below.

Implementation Instructions

The code stencil for the Pendularm assignment is available within the "project_pendularm" subdirectory of KinEval.

For physical simulation, you will implement several numerical integrators for a pendulum with parameters specified in the code stencil. The numerical integrator will advance the state of the pendulum (angle and velocity) in time given the current acceleration, which your pendulum_acceleration function should compute using the pendulum equation of motion. Your code should update the angle and velocity in the pendulum object (pendulum.angle and pendulum.angle_dot) for the visualization to access. If implemented successfully, this ideal pendulum should oscillate about the vertical (where the angle is zero) and with an amplitude that preserves the initial height of the pendulum bob.
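As a hedged sketch of what such integrators look like, the code below advances a pendulum state with explicit Euler and velocity Verlet steps. It assumes the standard simple-pendulum equation of motion with the angle measured from the downward vertical and a motor torque stored in a field named control; that field name, the sign convention, and the function names eulerStep and velocityVerletStep are assumptions, and the stencil's conventions may differ.

```javascript
// Angular acceleration of an ideal pendulum with an applied motor torque.
// Assumes angle = 0 hangs straight down; p.control is a hypothetical field.
function pendulumAcceleration(p) {
    return -(p.gravity / p.length) * Math.sin(p.angle)
        + p.control / (p.mass * p.length * p.length);
}

// Explicit Euler: both updates use the state at the start of the step.
function eulerStep(p, dt) {
    const accel = pendulumAcceleration(p);
    p.angle += p.angle_dot * dt;
    p.angle_dot += accel * dt;
}

// Velocity Verlet: position uses the current acceleration, velocity uses
// the average of the accelerations before and after the position update.
function velocityVerletStep(p, dt) {
    const a0 = pendulumAcceleration(p);
    p.angle += p.angle_dot * dt + 0.5 * a0 * dt * dt;
    const a1 = pendulumAcceleration(p);
    p.angle_dot += 0.5 * (a0 + a1) * dt;
}
```

With the controller off, explicit Euler tends to gain energy over time for this system, while velocity Verlet keeps the swing amplitude close to its initial value, which is one way to check an implementation against the expected behavior described above.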

Students enrolled in the undergraduate section will implement numerical integrators for Euler integration and velocity Verlet integration, as listed in the Features Overview above.

For motion control, students in both the undergraduate and graduate sections will implement a proportional-integral-derivative controller to control the system's motor to a desired angle. This PID controller should output control forces integrated into the system's dynamics. You will need to tune the gains of the PID controller for stable and timely motion to the desired angle for a pendulum with parameters: length=2.0, mass=2.0, gravity=9.81. These default values are also provided directly in the init() function.
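The structure of a PID controller can be sketched as below. This is a minimal illustration with a hypothetical factory function makePidController; the gains used in any example call are placeholders and are not tuned values for the Pendularm.

```javascript
// Returns a function that computes a PID control output each time step.
// makePidController is a hypothetical name, not part of the stencil.
function makePidController(kp, ki, kd) {
    let integral = 0;       // accumulated error over time
    let previousError = 0;  // error from the previous call
    return function (desired, current, dt) {
        const error = desired - current;
        integral += error * dt;
        const derivative = (error - previousError) / dt;
        previousError = error;
        return kp * error + ki * integral + kd * derivative;
    };
}
```

A controller created as makePidController(kp, ki, kd) would be called once per simulation step with the desired angle, the current angle, and the timestep, and its output applied as the motor's control input before integrating the dynamics.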

For user input, you should be able to:

- select the choice of integrator using the [0-4] keys (with the "none" integrator as a default),
- toggle the invocation of the servo controller with the 'c' or 'x' key (which is off by default),
- decrement and increment the desired angle of the 1 DOF servoed robot arm using the 'q' and 'e' keys,
- (for the double pendulum) decrement and increment the desired angle of the second joint of the arm using the 'w' and 'r' keys, and
- momentarily disable the servo controller with the 's' key (allowing the arm to swing uncontrolled).

Graduate Section Requirement

Students enrolled in the graduate section will implement numerical integrators for Euler, Verlet, velocity Verlet, and Runge-Kutta 4 integration to simulate and control a single pendulum (in "update_pendulum_state.js"). Then, students in the graduate section will implement one of the above integrators for a double pendulum (in "update_pendulum_state2.js"). Any of the integrators may work as your choice for the double pendulum implementation, although the Runge-Kutta integrator is recommended. The double pendulum is allowed to have a smaller timestep than the single pendulum, within reasonable limits. A working visualization for the double pendularm will look similar to this result video by mamantov:

Advanced Extensions

Of the possible advanced extension points, one additional point for this assignment can be earned by generating a random desired setpoint state and using PID control to drive your Pendularm to this setpoint. This code must randomly generate a new desired setpoint and resume PID control once the current setpoint is achieved. A setpoint is considered achieved if the current state matches the desired state up to 0.01 radians for 2 seconds. The number of setpoints that can be achieved in 60 seconds must be maintained and reported in the user interface. The invocation of this setpoint trial must be enabled by a user pressing the "t" key in the user interface.
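One possible way to track whether a setpoint has been held long enough is sketched below. The function name makeSetpointMonitor and its interface are hypothetical, and it interprets "matches" as the absolute angle error staying within the tolerance continuously for the hold time.

```javascript
// Returns a function that reports true once the angle has stayed within
// `tolerance` of the desired value for at least `holdTime` seconds.
// makeSetpointMonitor is a hypothetical helper, not part of the stencil.
function makeSetpointMonitor(tolerance, holdTime) {
    let timeWithin = 0;
    return function (angle, desired, dt) {
        if (Math.abs(angle - desired) <= tolerance) {
            timeWithin += dt;   // still inside the tolerance band
        } else {
            timeWithin = 0;     // left the band, so restart the clock
        }
        return timeWithin >= holdTime;
    };
}
```

Under these assumptions, a trial loop would call the monitor each simulation step with the tolerance and hold time from the description above, and on a true result would increment the achieved count and draw a new random setpoint with a fresh monitor.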

Of the possible advanced extension points, two additional points for this assignment can be earned by implementing a simulation of a planar cart pole system. This cart pole system should have joint limits on its prismatic joint and no motor forces applied to the rotational joint. This cart pole implementation should be contained within the file "cartpole.html" under the "project_pendularm" directory.

Of the possible optional extension points, two additional points for this assignment can be earned by implementing a single pendulum simulator in maximal coordinates with a spring constraint enforced by Gauss-Seidel optimization. This maximal coordinate pendulum implementation should be contained within the file "pendularm1_maximal.html" under the "project_pendularm" directory. An additional point can be earned by extending this implementation to a cloth simulator in the file "cloth_pointmass.html".

Of the possible advanced extension points, three additional points for this assignment can be earned by developing a Newton-Euler simulation for a single rigid body with a cube geometry without consideration of contact with other objects. This rigid body simulation should be contained within the file "rigid_body_sim.html" under the "project_pendularm" directory.

Of the possible advanced extension points, three additional points for this assignment can be earned by developing and implementing a maximal coordinate dynamical simulation of a biped hopper with links as planar 2D rigid bodies capable of locomotion on a flat ground plane. This hopper simulation should be contained within the file "hopper_planar.html" under the "project_pendularm" directory.

Of the possible optional extension points, one additional point for this assignment can be earned by implementing a plot visualization of the state and desired setpoint for the 1 DoF pendulum over a 20 second window (of simulation time) within the pendularm1.html user interface. The Pendularm user interface must maintain at least the same usability as the provided pendularm1.html implementation.

Of the possible optional extension points, two additional points for this assignment can be earned by implementing a method for model predictive control within pendularm1.html for setpoint control.

Project Submission

For turning in your assignment, push your updated code to the master branch in your repository.

Optional: Pendularm Setpoint Competition

Details will be provided for participation in the Pendularm Setpoint Competition. Three additional points towards final grading will be awarded to the top performer in the Pendularm Setpoint Competition in each of the graduate and undergraduate sections. One additional point will be granted to the second and third place performers in each of the graduate and undergraduate sections.

Assignment 3: Forward Kinematics

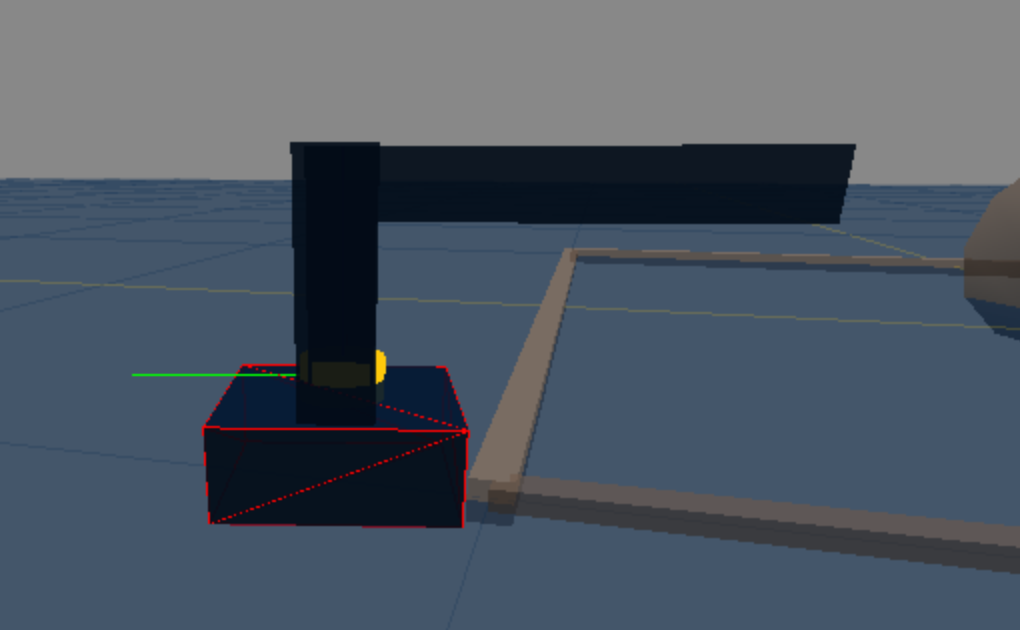

Due 11:59pm, Friday, February 23, 2024

Forward kinematics (FK) forms the core of our ability to purposefully control the motion of a robot arm. FK will provide us a general formulation for controlling any robot arm to reach a desired configuration and execute a desired trajectory. Specifically, FK allows us to predict the spatial layout of the robot in our 3D world given a configuration of its joints. For the purposes of grasping and dexterous tasks, FK gives us the critical ability to predict the location of the robot's gripper (also known as its "endeffector"). As shown in our IROS 2017 video below, such manipulation assumes a robot has already perceived its environment as a scene estimate of objects and their positions and orientations. Given this scene estimate, a robot controller uses FK to evaluate and execute viable endeffector trajectories for grasping and manipulating an object.

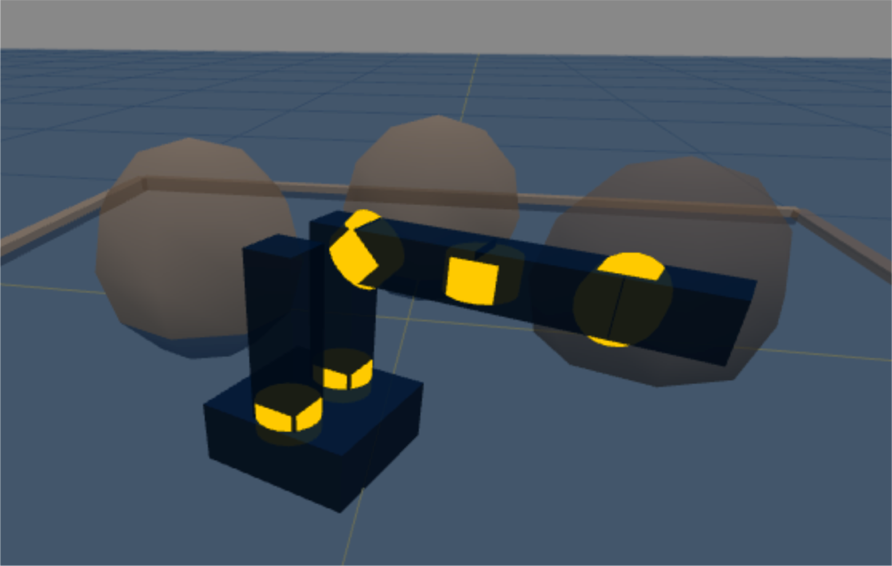

In this assignment, you will render the forward kinematics of an arbitrary robot, given an arbitrary kinematic specification. A collection of specifications for various robots is provided in the "robots" subdirectory of the KinEval code stencil. These robots include the Rethink Robotics' Baxter and Sawyer robots, the Fetch mobile manipulator, and a variety of example test robots, as shown in the "Desired Results" section below. To render the robot properly, you will compute matrix coordinate frame transforms for each link and joint of the robot based on the parameters of its hierarchy of joint configurations. The computation of the matrix transform for each joint and link will allow KinEval's rendering support routines to properly display the full robot. We will assume the joints will remain in their zero position, saving joint motion for the next assignment.

Features Overview

This assignment requires the following features to be implemented in the corresponding files in your repository:

- [Optional, recommended] Fill in stencils for Just Starting Mode in "kineval/kineval_startingpoint.js"
- Core matrix routines in "kineval/kineval_matrix.js"
- FK transforms in "kineval/kineval_forward_kinematics.js"
- Joint selection/rendering, based on the kinematic hierarchy in "kineval/kineval_robot_init_joints.js"
- New robot definition in your own file in the "robots" directory

Points distributions for these features can be found in the project rubric section. More details about each of these features and the implementation process are given below.

Just Starting Mode

While previous assignments were implemented within self-contained subsections of the kineval_stencil repository, with this project, you will start working with the KinEval part of the stencil repository that also supports all future projects in the course. This KinEval stencil allows for developing the core of a modeling and control computation stack (forward kinematics, inverse kinematics, and motion planning) in a modular fashion.

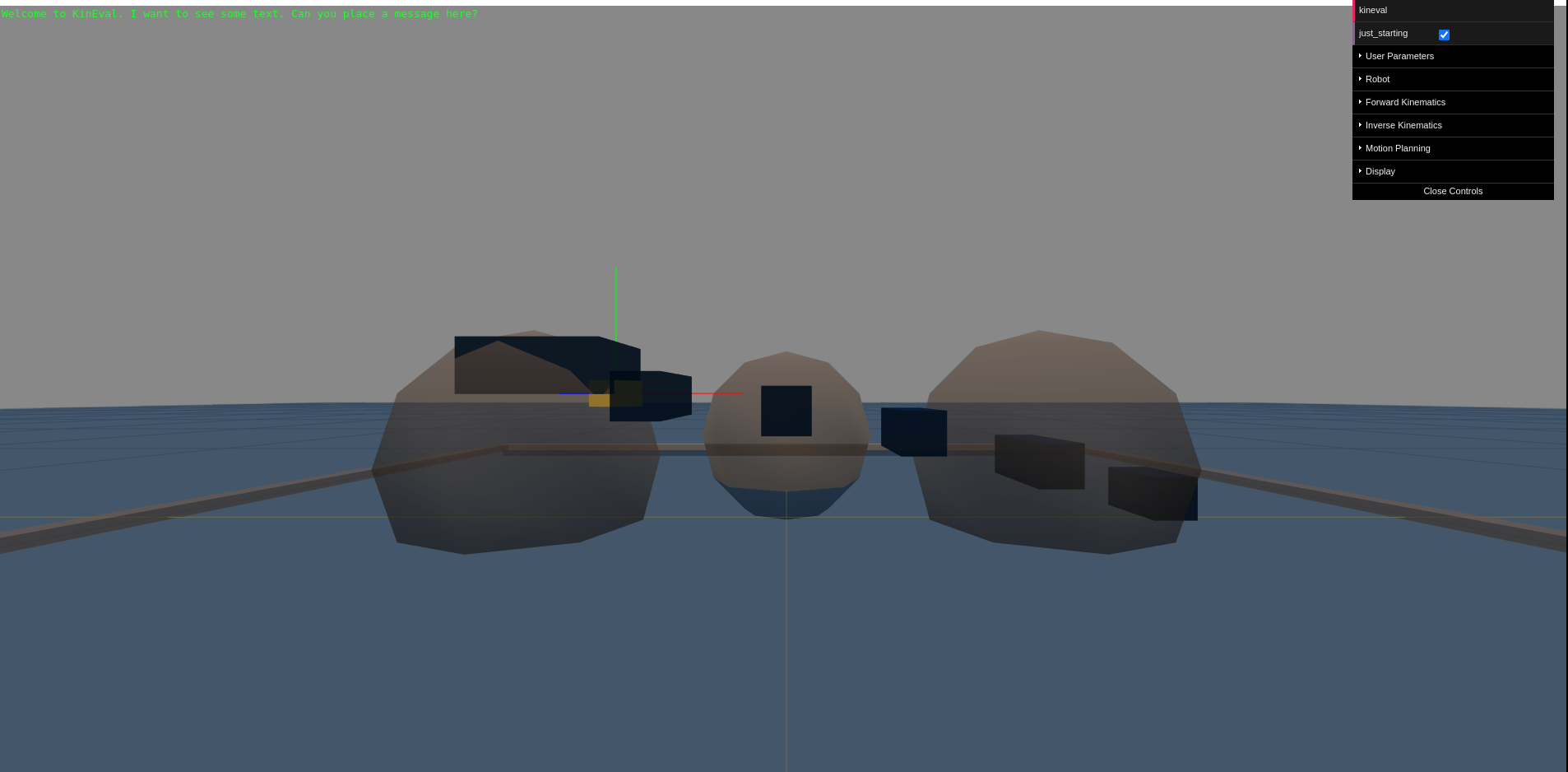

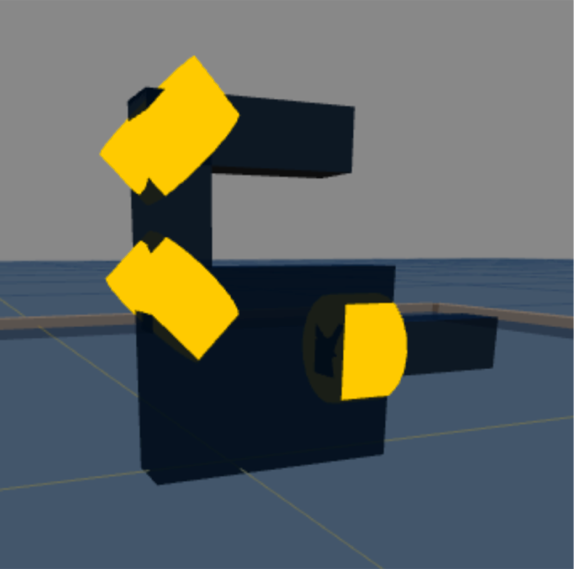

If you open "home.html" in this repository, you should see the disconnected pieces of a robot bouncing up and down in the default environment. This initial mode is the "starting point" state of the stencil to help build familiarity with JavaScript/HTML5 and KinEval.

Your (optional) first task is to make the bouncing robot in starting point mode responsive to keyboard commands. Specifically, the robot pieces will move upward, stop/start jittering, move closer together, and further apart (although more is encouraged). To do this, you will modify "kineval/kineval_startingpoint.js" at the sections marked with "STENCIL". These sections also include code examples meant to be a quick (and very rough) introduction to JavaScript and homogeneous transforms for translation, assuming programming competency in another language.

Brief KinEval Stencil Overview

Within the KinEval stencil, the functions my_animate() and my_init() in "home.html" are the principal access points into the animation system. my_animate() is particularly important as it will direct the invocation of functions we develop throughout the AutoRob course. my_animate() and my_init() are called by the primary process that maintains the animation loop: kineval.animate() and kineval.init() within "kineval/kineval.js".

IMPORTANT: "kineval/kineval.js", kineval.animate(), kineval.init(), and any of the given robot descriptions should not be modified.

For Just Starting Mode, my_animate() will call startingPlaceholderAnimate() and startingPlaceholderInit(), defined in "kineval/kineval_startingpoint.js". startingPlaceholderInit() contains JavaScript tutorial-by-example code that initializes variables for this project. startingPlaceholderAnimate() contains keyboard handlers and code to update the positioning of each body of the robot. By modifying the proper variables at the locations labeled "STENCIL", this code will update the transformation matrix for each geometry of the robot (stored in the ".xform" attribute) as a translation in the robot's world. The ".xform" transform for each robot geometry is then used by kineval.robotDraw() to have the browser render the robot parts in the appropriate locations.

Forward Kinematics Files

Assuming proper completion of Just Starting Mode, you are now ready for implementation of robot forward kinematics. The following files are included (within script tags) in "home.html". You will modify these files for implementing FK:

- "kineval/kineval_robot_init_joints.js" for initializing your robot object based on a given description object; modification of this file is required to add parent and child references to each link
- "kineval/kineval_forward_kinematics.js" for implementing (a recursive) traversal over joints and links to compute transforms; traversal of forward kinematics is invoked from kineval.robotForwardKinematics() within my_animate() in home.html
- "kineval/kineval_matrix.js" for the implementation of your vector and matrix routines, such as for matrix multiplication, matrix generation, etc.

Core Matrix Routines

A good place to start with your FK implementation is writing and testing the core matrix routines. "kineval/kineval_matrix.js" contains function stencils for all required linear algebra routines. You will need to uncomment and fill in all the functions provided in this file except matrix_pseudoinverse and matrix_invert_affine. You do not need to implement the pseudoinverse calculation for this assignment; you should leave the matrix_pseudoinverse function commented out. You can implement the affine inverse function, but it will not be used in or tested for this assignment.

It is good practice to test these functions before continuing with your FK implementation. Consider writing a collection of tests using example matrix and vector calculations from the lecture slides or other sources.
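One way to set up such tests is sketched below, using matrices stored as arrays of rows. The function names here (multiplyMatrices, translationMatrix, rotationMatrixZ, matricesAlmostEqual) are illustrative assumptions; your tests should call the function names given in the "kineval/kineval_matrix.js" stencil instead.

```javascript
// Matrices are arrays of rows, e.g. a 4x4 homogeneous transform.
// All names below are hypothetical stand-ins for the stencil's routines.
function multiplyMatrices(a, b) {
    const rows = a.length, inner = b.length, cols = b[0].length;
    const out = [];
    for (let i = 0; i < rows; i++) {
        out.push([]);
        for (let j = 0; j < cols; j++) {
            let sum = 0;
            for (let k = 0; k < inner; k++) sum += a[i][k] * b[k][j];
            out[i].push(sum);
        }
    }
    return out;
}

function translationMatrix(tx, ty, tz) {
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]];
}

function rotationMatrixZ(angle) {
    const c = Math.cos(angle), s = Math.sin(angle);
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]];
}

// Element-wise comparison with a tolerance for floating-point error.
function matricesAlmostEqual(a, b, tol) {
    if (a.length !== b.length || a[0].length !== b[0].length) return false;
    for (let i = 0; i < a.length; i++) {
        for (let j = 0; j < a[0].length; j++) {
            if (Math.abs(a[i][j] - b[i][j]) > tol) return false;
        }
    }
    return true;
}
```

Useful checks include properties with known answers: composing two translations should give the translation by the summed offsets, and rotating the point (1, 0, 0) by 90 degrees about Z should give (0, 1, 0).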

Robot Examples

Each file in the "robots" subdirectory contains code to create a robot data object. This data object is initialized with the kinematic description of a robot (as well as some meta information and rendering geometries). The kinematic description defines a hierarchical configuration of the robot's links and joints. This description is a subset of the Unified Robot Description Format (URDF) converted into JSON format. The basic features of URDF are described in this tutorial.

IMPORTANT (seriously): The given robot description files should NOT be modified. Code that requires modified robot description files will fail tests used for grading. You are welcomed and encouraged to create new robot description files for additional testing.

The selection of different robot descriptions can occur directly in the URL for "home.html". As a default, the "home.html" in the KinEval stencil assumes the "mr2" robot description in "robots/robot_mr2.js". Another robot description file can be selected directly in the URL by adding a robot parameter. This parameter is segmented by a question mark and sets the robot file pointer to a given file local location, relative to "home.html". For example, a URL with "home.html?robot=robots/robot_urdf_example.js" will use the URDF example description. Note that to see the selected robot model in your visualization, you will need to turn off Just Starting Mode and have your FK methods implemented; see the Invoking Forward Kinematics section for more details.

Initialization of Kinematic Hierarchy

In addition to the various existing initialization functions, you should extend the robot object to complete the kinematic hierarchy to specify the parent and children joints for each link. This modification should be made in the kineval.initRobotJoints() function in "kineval/kineval_robot_init_joints.js". The children array of a link should be defined for all links except the leaves of the kinematic tree, in which case the ".children" property should be left undefined. For the KinEval user controls to work properly, the children array should be named the ".children" property of the link.

Note: KinEval refers to links and joints as strings, not pointers, within the robot object. robot.joints (as well as robot.links) is an array of data objects that are indexed by strings. Each of these objects stores relevant fields of information about the joint, such as its transform (".xform"), parent (".parent") and child (".child") in the kinematic hierarchy, local transform information (".origin"), etc. As such, robot.joints['JointX'] refers to an object for a joint. In contrast, robot.joints['JointX'].child refers to a string ('LinkX') that can then be used to reference a link object (as robot.links['LinkX']). Similarly, robot.links['LinkX'].parent refers to a joint as a string 'JointX' that can then be used to reference a joint object in the robot.joints array.
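The string-based referencing described above can be illustrated with a toy robot object. The robot below and the function name linkKinematicHierarchy are made up for illustration; only the field names ".parent", ".child", ".children", ".origin", and "base" follow the description in this section.

```javascript
// A made-up three-link robot; link and joint names are hypothetical.
const exampleRobot = {
    base: "base",
    links: { base: {}, upper_arm: {}, forearm: {} },
    joints: {
        shoulder: { parent: "base", child: "upper_arm",
                    origin: { xyz: [0, 0, 1], rpy: [0, 0, 0] } },
        elbow: { parent: "upper_arm", child: "forearm",
                 origin: { xyz: [1, 0, 0], rpy: [0, 0, 0] } }
    }
};

// For each joint, record it (by name) as the parent of its child link
// and as one of the children of its parent link.
function linkKinematicHierarchy(robot) {
    for (const jointName in robot.joints) {
        const joint = robot.joints[jointName];
        robot.links[joint.child].parent = jointName;
        const parentLink = robot.links[joint.parent];
        if (parentLink.children === undefined) parentLink.children = [];
        parentLink.children.push(jointName);
    }
}

linkKinematicHierarchy(exampleRobot);
```

After this call, exampleRobot.links["base"].children holds the string "shoulder", and the leaf link "forearm" has no ".children" property, matching the convention that leaves are left undefined.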

Invoking Forward Kinematics

The function kineval.robotForwardKinematics() in "kineval/kineval_forward_kinematics.js" will be the main point of invocation for your FK implementation. This function will need to call kineval.buildFKTransforms(), which is a function you will add to this file. kineval.buildFKTransforms() will update matrix transforms for the frame of each link and joint with respect to the global world coordinates. The computed transform for each frame of the robot needs to be stored in the ".xform" field of each link or joint. For a given link named 'LinkX', this xform field can be accessed as robot.links['LinkX'].xform. For a given joint named 'JointX', this xform field can be accessed as robot.joints['JointX'].xform. Once kineval.robotForwardKinematics() completes, the updated transforms for each frame are used by the function kineval.robotDraw() in the support code to render the robot.

A matrix stack recursion can be used to compute these global frames, starting from the base of the robot (specified as a string in robot.base). This recursion should use the provided local translation and rotation parameters of each joint in relation to its parent link in its traversal of the hierarchy. For a given joint 'JointX', these translation and rotation parameters are stored in the robot object as robot.joints['JointX'].origin.xyz and robot.joints['JointX'].origin.rpy, respectively. The current global translation and rotation for the base of the robot (robot.base) in the world coordinate frame is stored in robot.origin.xyz and robot.origin.rpy, respectively.
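The recursion described above might be sketched as follows. This is a minimal illustration, not the stencil's implementation: the helper names (matMul, translate, rotX, rotY, rotZ, originTransform, traverseLink, traverseJoint, buildFKTransformsSketch) and the example robot are assumptions, and the rotation order Rz(yaw) Ry(pitch) Rx(roll) follows the usual URDF convention for rpy, which should be checked against the course material. The call stack plays the role of the matrix stack, since each call receives the accumulated transform of its parent.

```javascript
// 4x4 matrix product (hypothetical helper).
function matMul(a, b) {
    var out = [];
    for (var i = 0; i < 4; i++) {
        out.push([0, 0, 0, 0]);
        for (var j = 0; j < 4; j++)
            for (var k = 0; k < 4; k++)
                out[i][j] += a[i][k] * b[k][j];
    }
    return out;
}
function translate(x, y, z) {
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]];
}
function rotX(t) {
    var c = Math.cos(t), s = Math.sin(t);
    return [[1, 0, 0, 0], [0, c, -s, 0], [0, s, c, 0], [0, 0, 0, 1]];
}
function rotY(t) {
    var c = Math.cos(t), s = Math.sin(t);
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]];
}
function rotZ(t) {
    var c = Math.cos(t), s = Math.sin(t);
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]];
}
// Local transform from an ".origin" object: translation, then Rz * Ry * Rx (assumed order).
function originTransform(origin) {
    var r = origin.rpy;
    var rotation = matMul(rotZ(r[2]), matMul(rotY(r[1]), rotX(r[0])));
    return matMul(translate(origin.xyz[0], origin.xyz[1], origin.xyz[2]), rotation);
}
// A link shares the frame handed down by its parent joint (or the base pose).
function traverseLink(robot, linkName, parentXform) {
    var link = robot.links[linkName];
    link.xform = parentXform;
    if (typeof link.children === "undefined") return; // leaf link
    for (var i = 0; i < link.children.length; i++)
        traverseJoint(robot, link.children[i], link.xform);
}
// A joint frame is its parent link's frame composed with the joint's local origin.
function traverseJoint(robot, jointName, parentXform) {
    var joint = robot.joints[jointName];
    joint.xform = matMul(parentXform, originTransform(joint.origin));
    traverseLink(robot, joint.child, joint.xform);
}
function buildFKTransformsSketch(robot) {
    traverseLink(robot, robot.base, originTransform(robot.origin));
}

// Hypothetical robot: base raised 1 unit, first joint yawed by 90 degrees.
var exampleRobot = {
    base: "base",
    origin: { xyz: [0, 1, 0], rpy: [0, 0, 0] },
    links: {
        base: { children: ["joint1"] },
        link1: { parent: "joint1", children: ["joint2"] },
        link2: { parent: "joint2" }
    },
    joints: {
        joint1: { parent: "base", child: "link1", origin: { xyz: [1, 0, 0], rpy: [0, 0, Math.PI / 2] } },
        joint2: { parent: "link1", child: "link2", origin: { xyz: [1, 0, 0], rpy: [0, 0, 0] } }
    }
};
buildFKTransformsSketch(exampleRobot);
```

In this example the second joint's local x-offset is rotated by the first joint's yaw, so the frame of 'joint2' ends up at approximately (1, 2, 0) in world coordinates.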

To run your FK routine, you must toggle out of starting point mode. This toggle can be done interactively within the GUI menu or by setting kineval.params.just_starting to false. The code below in "home.html" controls starting point mode invocation, where a single line can be uncommented to use FK mode by default:

    // set to starting point mode is true as default
    // set to false once starting forward kinematics project
    //kineval.params.just_starting = false;
    if (kineval.params.just_starting == true) {
        startingPlaceholderAnimate();
        kineval.robotDraw();
        return;
    }

Note: The stencil in "kineval/kineval_forward_kinematics.js" states that the user interface requires "robot_heading" and "robot_lateral", but these are for Assignment 4. You do not need these variables for this assignment.

Desired Results

The "robots/robot_mr2.js" example should produce the following:

If implemented properly, the "robots/robot_urdf_example.js" example should produce the following rendering:

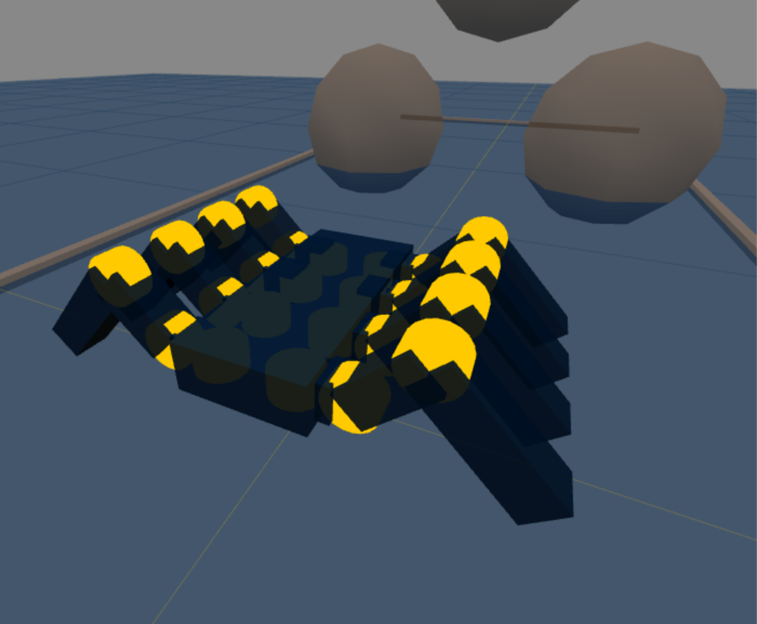

The "robots/robot_crawler.js" example should produce the following (shown with joint axes highlighted):

Interactive Hierarchy Traversal

Additionally, a correct implementation will be able to interactively traverse the kinematic hierarchy by changing the active joint. The active joint has focus for user control, which will be used in the next assignment. For now, we are using the active joint to ensure your kinematic hierarchy is correct. You should be able to move up and down the kinematic hierarchy with the "k" and "j" keys, respectively. You can also move between the children of a link using the "h" and "l" keys.

Orienting Joint Rendering Cylinders

The cylinders used as rendering geometries for joints are not aligned with joint axes by default. The support code in KinEval will properly orient joint rendering cylinders. To use this functionality, simply ensure that the vector_cross() function is correctly implemented in "kineval/kineval_matrix.js". vector_cross() will be automatically detected and used to properly orient each joint rendering cylinder.
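For reference, a cross product of two 3D vectors can be written as below. This sketch assumes both arguments are plain three-element arrays and that a three-element array is returned; the exact vector representation expected by the KinEval support code should be confirmed against the stencil.

```javascript
// Cross product a x b for 3-element arrays (the array representation is an assumption).
function vector_cross(a, b) {
    return [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0]
    ];
}

// The x-axis crossed with the y-axis gives the z-axis in a right-handed frame.
var zAxis = vector_cross([1, 0, 0], [0, 1, 0]);
```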

New Robot Description

Students also must create a new robot description file that is compatible with their KinEval forward kinematics routines.

Students will create their new robot description by working as a pair with another student.

Each pair of students will submit their new robot description by presenting it to the class during the interactive session on March 6th for our robot showcase.

In addition, students should push their description file to their assignment repository in a new file titled "robots/new_robot_description.js" before the start of the interactive session.

Include the name or uniquename of your partner in a new "robot.partner_name" property of your robot description.

Of the two possible points for this feature, one point is earned by showcasing your description to the class and the second point is earned based on your description being compatible with the KinEval forward kinematics routines.

If external geometries are imported (similar to the Fetch and Baxter), the robot description should be in a new subdirectory with the robot's name. The robot's name should also be used to name the URDF file, such as "robots/newrobotname/newrobotname.urdf.js". It is requested that geometries for a new robot go into this directory within a "meshes" subdirectory, such as "robots/newrobotname/meshes". Guidance can be provided during office hours about creating or converting URDF-based robot description files to KinEval-compliant JavaScript and importing Collada, STL, and Wavefront OBJ geometry files.

Graduate Section Requirement

Students in the AutoRob Graduate Section must: 1) implement the assignment as described above to work with all given examples, which includes the Fetch, Baxter, and Sawyer robot descriptions, and 2) create a new robot description that works with KinEval.

The files "robots/fetch/fetch.urdf.js", "robots/baxter/baxter.urdf.js", and "robots/sawyer/sawyer.urdf.js" contain the robot data objects for the Fetch, Baxter, and Sawyer kinematic descriptions. The Fetch robot JavaScript file was converted from the Fetch URDF description for ROS. A similar process was used for the Baxter URDF description.

ROS uses a different default coordinate system than threejs, which needs to be taken into account in the FK computation for these three robots. ROS assumes that the Z, X, and Y axes correspond to the up, forward, and side directions, respectively. In contrast, threejs assumes that the Y, Z, and X axes correspond to the up, forward, and side directions. The variable robot.links_geom_imported will be set to true when geometries have been imported from ROS and set to false when geometries are defined completely within the robot description file. You will need to extend your FK implementation to compensate for the coordinate frame difference when this variable is set to true.
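The axis correspondence above can be written as a single change-of-basis matrix. The sketch below is one way to express it, under the conventions stated in the paragraph: a point given in ROS axes (x forward, y side, z up) is mapped to threejs axes (x side, y up, z forward). The names rosToThreejs and applyToPoint are hypothetical. Where this matrix enters the FK computation is a design choice; one plausible option is to compose it with the base transform when robot.links_geom_imported is true.

```javascript
// Maps ROS coordinates to threejs coordinates (hypothetical name):
// threejs x (side) = ROS y, threejs y (up) = ROS z, threejs z (forward) = ROS x.
var rosToThreejs = [
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 1]
];

// Applies a 4x4 homogeneous transform to a 3D point given as [x, y, z].
function applyToPoint(m, p) {
    var h = [p[0], p[1], p[2], 1];
    var out = [0, 0, 0];
    for (var i = 0; i < 3; i++)
        for (var k = 0; k < 4; k++)
            out[i] += m[i][k] * h[k];
    return out;
}

var rosUp = [0, 0, 1];
var threeUp = applyToPoint(rosToThreejs, rosUp); // the threejs up direction, [0, 1, 0]
```

This matrix is a cyclic permutation of the axes, so it is a proper rotation (determinant +1) and is equivalent to the product of a rotation of -90 degrees about y followed on the right by a rotation of -90 degrees about x.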

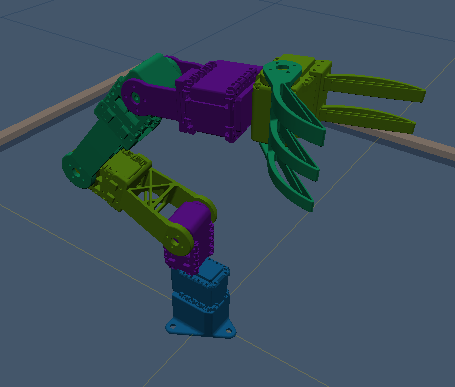

A proper implementation for fetch.urdf.js description should produce the following (shown with joint axes highlighted):

The "robots/sawyer/sawyer.urdf.js" example should produce the following:

Students are highly encouraged to port URDF descriptions of real world robot platforms into their code. Such examples of real world robot systems include the Kinova Movo, NASA Valkyrie and Robonaut 2, Boston Dynamics Atlas, Universal Robots UR10, and Willow Garage PR2.

The following KinEval-compatible robot descriptions were created by students in past offerings of the AutoRob course. These descriptions are available for your use:

Boston Dynamics Atlas by yeyangf

Agility Robotics Cassie by mungam

Universal Robots UR10 by chengyah

Advanced Extensions

Of the possible advanced extension points, two additional points for this assignment can be earned by generating a proper Denavit-Hartenberg table for the kinematics of the Fetch robot. This table should be placed in the "robots/fetch" directory in the file "fetchDH.txt".

Of the possible advanced extension points, three additional points for this assignment can be earned by implementing LU decomposition (with pivoting) routines for matrix inversion and solving linear systems. These functions should be named "matrix_inverse" and "linear_solve" and placed within the file containing your matrix routines.

Of the possible advanced extension points, three additional points for this assignment can be earned by implementing rigid body transformations as dual quaternions (Kenwright 2012), in addition to the quaternion-based method described in class. Use of dual quaternion transformations must be selectable from the KinEval user interface.

Of the possible advanced extension points, three additional points for this assignment can be earned by implementing rigid body transformations as products of exponentials. Use of matrix exponential transformations must be selectable from the KinEval user interface.

Project Submission

For turning in your assignment, push your updated code to the master branch in your repository.

Assignment 4: Robot FSM Dance Contest

Due 4:00pm, Monday, March 11, 2024

Executing choreographed motion is the most common use of current robots. Robot choreography is predominantly expressed as a sequence of setpoints (or desired states) for the robot to achieve in its motion execution. This form of robot control can be found among a variety of scenarios, such as robot dancing (video below), GPS navigation of autonomous drones, and automated manufacturing. General to these robot choreography scenarios is a given setpoint controller (such as our PID controller from Pendularm) and a sequence controller (which we will now create).

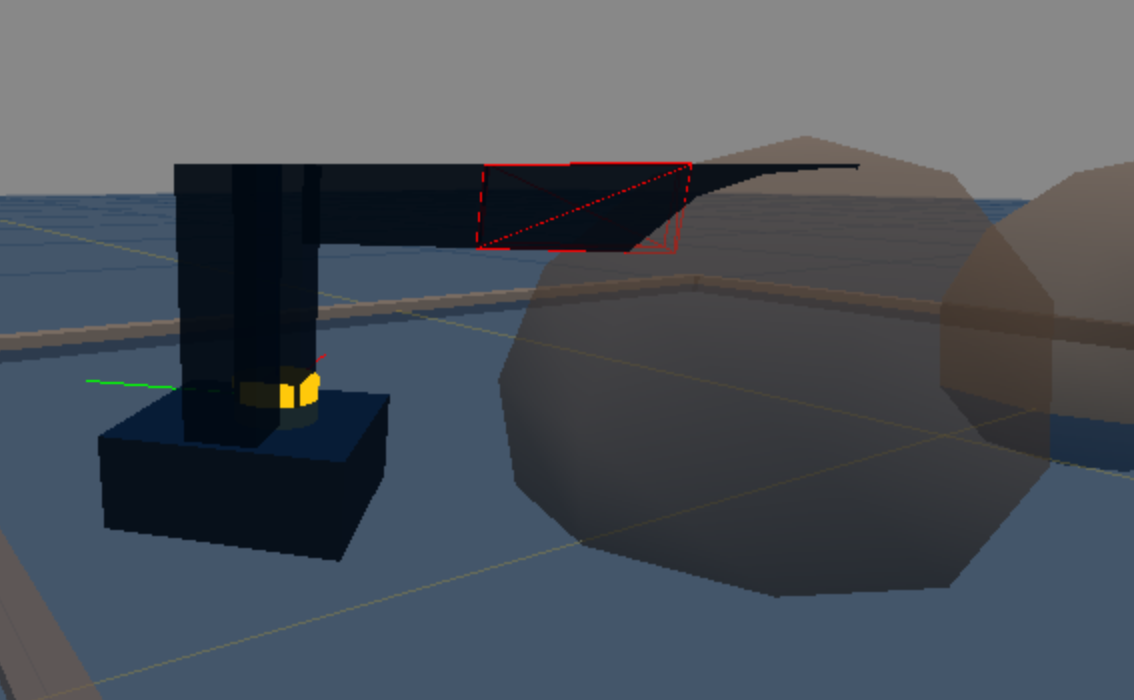

For this assignment, you will build your own robot choreography system. This choreography system will enable a robot to execute a dance routine by adding motor rotation to its joints and creating a Finite State Machine (FSM) controller over pose setpoints. Your FK implementation will be extended to consider angular rotation about each joint axis using quaternions for axis-angle rotation. The positioning of each joint with respect to a given pose setpoint will be controlled by a simple P servo implementation (based on the Pendularm assignment). You will implement an FSM controller to update the current pose setpoint based on the robot's current state and predetermined sequence of setpoints. For a single robot, you will choreograph a dance for the robot by creating an FSM with your design of pose setpoints and an execution sequence.

This controller for the "mr2" example robot was a poor attempt at robot Saturday Night Fever (please do better):

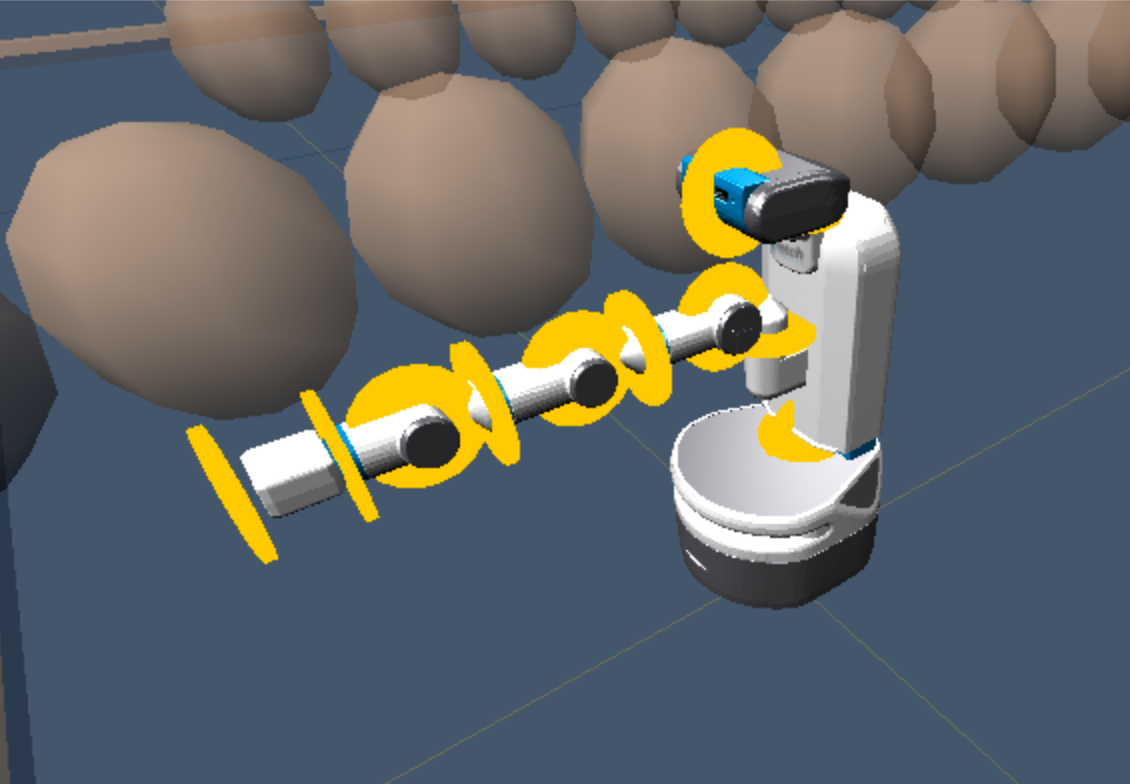

This updated dance controller for the Fetch robot is a bit better, but still very far from optimal:

Features Overview

This assignment requires the following features to be implemented in the corresponding files in your repository:

- Quaternion joint rotation in "kineval/kineval_quaternion.js" (for quaternion functions) and "kineval/kineval_forward_kinematics.js" (to add axis-angle joint rotation to existing kinematic traversal)

- Interactive base control vectors in "kineval/kineval_forward_kinematics.js"

- Pose setpoint controller in "kineval/kineval_servo_control.js"

- Dance FSM in "kineval/kineval_servo_control.js" (FSM controller) and "home.html" (dance setpoint initialization)

- [Grad section only] Joint limit enforcement in "kineval/kineval_controls.js"

- [Grad section only] Prismatic joint implementation in "kineval/kineval_forward_kinematics.js"

- [Grad section only] Fetch rosbridge interface

Point distributions for these features can be found in the project rubric section. More details about each of these features and the implementation process are given below.

Joint Axis Rotation and Interactive Joint Control

Going beyond the joint properties you worked with in Assignment 3, each joint of the robot now needs several additional properties for joint rotation and control. These joint properties for the current angle rotation (".angle"), applied control (".control"), and servo parameters (".servo") have already been created within the function kineval.initRobotJoints(). The joint's angle will be used to calculate a rotation about the joint's (normal) axis of rotation vector, specified in the ".axis" field. To complete an implementation of 3D rotation due to joint movement, you will need to first implement basic quaternion functions in "kineval/kineval_quaternion.js" then extend your FK implementation in "kineval/kineval_forward_kinematics.js" to account for the additional rotations.
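As a sketch of the quaternion functions mentioned above, the code below builds a unit quaternion from an axis and an angle and converts it to a homogeneous rotation matrix. The [w, x, y, z] array layout and the function names are assumptions for illustration and may differ from what the stencil in "kineval/kineval_quaternion.js" expects. In the FK traversal, the resulting matrix would typically be multiplied onto the joint's transform after the joint's local origin transform.

```javascript
// Scales a quaternion [w, x, y, z] to unit length.
function quaternionNormalize(q) {
    var n = Math.sqrt(q[0] * q[0] + q[1] * q[1] + q[2] * q[2] + q[3] * q[3]);
    return [q[0] / n, q[1] / n, q[2] / n, q[3] / n];
}

// Unit quaternion for a rotation of `angle` radians about `axis` ([x, y, z]).
function quaternionFromAxisAngle(axis, angle) {
    var len = Math.sqrt(axis[0] * axis[0] + axis[1] * axis[1] + axis[2] * axis[2]);
    var s = Math.sin(angle / 2) / len; // dividing by len normalizes the axis
    return quaternionNormalize([Math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s]);
}

// 4x4 homogeneous rotation matrix from a unit quaternion [w, x, y, z].
function quaternionToRotationMatrix(q) {
    var w = q[0], x = q[1], y = q[2], z = q[3];
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y), 0],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x), 0],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y), 0],
        [0, 0, 0, 1]
    ];
}

// Example: a joint with axis [0, 0, 1] and angle of 90 degrees.
var jointRotation = quaternionToRotationMatrix(
    quaternionFromAxisAngle([0, 0, 1], Math.PI / 2)
);
```

For this example, jointRotation matches a 90 degree rotation about z up to floating-point error: its first column is approximately (0, 1, 0).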

If joint axis rotation is implemented correctly, you should be able to use the 'u' and 'i' keys to move the currently active joint. These keys respectively decrement and increment the ".control" field of the active joint. Through the function kineval.applyControls(), this control value effectively adds an angular displacement to the joint angle.

Interactive Base Movement Controls

The user interface also enables controlling the global position and orientation of the robot base. In addition to joint updates, the system update function kineval.applyControls() also updates the base state (in robot.origin) with respect to its controls (specified in robot.controls). With the support function kineval.handleUserInput(), the 'wasd' keys are purposed to move the robot on the ground plane, with 'q' and 'e' keys for lateral base movement. In order for these keys to behave properly, you will need to add code to update variables that store the heading and lateral directions of the robot base: robot_heading and robot_lateral. These vectors need to be computed within your FK implementation in "kineval/kineval_forward_kinematics.js" and stored as global variables. They express the directions of the robot base's z-axis and x-axis in the global frame, respectively. Each of these variables should be a homogeneous 3D vector stored as a 2D array.
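One way these two variables might be computed is sketched below: the base's local z-axis and x-axis unit points, written as homogeneous column vectors, are transformed by the base's global transform. The helper matMulGeneral and the example base transform are hypothetical. Note that transforming a homogeneous point with a trailing 1 yields a point one unit along the axis from the base position; whether the support code expects this point or a pure direction should be checked against the stencil.

```javascript
// General matrix product for 2D arrays (hypothetical helper).
function matMulGeneral(a, b) {
    var out = [];
    for (var i = 0; i < a.length; i++) {
        out.push([]);
        for (var j = 0; j < b[0].length; j++) {
            var sum = 0;
            for (var k = 0; k < b.length; k++) sum += a[i][k] * b[k][j];
            out[i].push(sum);
        }
    }
    return out;
}

// Hypothetical base transform: base located at (2, 0, 3) with no rotation.
var baseXform = [
    [1, 0, 0, 2],
    [0, 1, 0, 0],
    [0, 0, 1, 3],
    [0, 0, 0, 1]
];

// Homogeneous 3D vectors stored as 2D (4x1) arrays.
var robot_heading = matMulGeneral(baseXform, [[0], [0], [1], [1]]); // along base z-axis
var robot_lateral = matMulGeneral(baseXform, [[1], [0], [0], [1]]); // along base x-axis
```

With this example transform, robot_heading is [[2], [0], [4], [1]] and robot_lateral is [[3], [0], [3], [1]].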

If robot_heading and robot_lateral are implemented properly, the robot should now be interactively controllable in the ground plane using the keys described in the previous paragraph.

Pose Setpoint Controller

Once joint axis rotation is implemented, you will implement a proportional setpoint controller for the robot joints in function kineval.robotArmControllerSetpoint() within "kineval/kineval_servo_control.js". The desired angle for a joint 'JointX' is stored in kineval.params.setpoint_target['JointX'] as a scalar by the FSM controller or keyboard input. The setpoint controller should take this desired angle, the joint's current angle (".angle"), and servo gains (specified in the ".servo" object) to set the control (".control") for each joint. All of these joint object properties are initialized in the function kineval.initRobotJoints() in "kineval/kineval_robot_init_joints.js". Note that the "servo.d_gain" is not used in this assignment; it is for advanced extensions.
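A proportional setpoint controller of the kind described above can be sketched in a few lines. The function name setpointControlSketch, the plain-object arguments, and the gain field name "p_gain" are assumptions made for illustration (the source only names "servo.d_gain" explicitly); in the assignment, the desired angles come from kineval.params.setpoint_target.

```javascript
// For each joint: control = proportional gain * (desired angle - current angle).
// `joints` and `setpointTarget` are objects indexed by joint name.
function setpointControlSketch(joints, setpointTarget) {
    for (var jointName in joints) {
        var joint = joints[jointName];
        var error = setpointTarget[jointName] - joint.angle;
        joint.control = joint.servo.p_gain * error; // "p_gain" is an assumed field name
    }
}

// Hypothetical single-joint example.
var exampleJoints = {
    JointX: { angle: 0.0, control: 0.0, servo: { p_gain: 0.1, d_gain: 0.0 } }
};
setpointControlSketch(exampleJoints, { JointX: 1.0 });
```

Because the control is later added to the joint angle as an angular displacement, a gain below 1 moves the joint a fraction of the remaining error on each update, so the angle approaches the setpoint gradually rather than jumping to it.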

Once you have implemented the control function described above, you can enable the controller by either holding down the 'o' key or selecting 'persist_pd' from the UI. With the controller enabled, the robot will attempt to reach the current setpoint. One setpoint is provided with the stencil code: the zero pose, where all joint angles are zero. Pressing the '0' key sets the current setpoint to the zero setpoint.